Memory Device

3D Animation | Experimental Dance Performance

Memory Device is an attempt to combine art and technology, including 3D modeling and animation, interactive VR, modern poetry, experimental world music, and dance performance. The video presented here is the first chapter of Memory Device: Wake up, Singing for Memory. For this chapter, we chose a representative clip that combines experimental music, poetry, and dance performance.

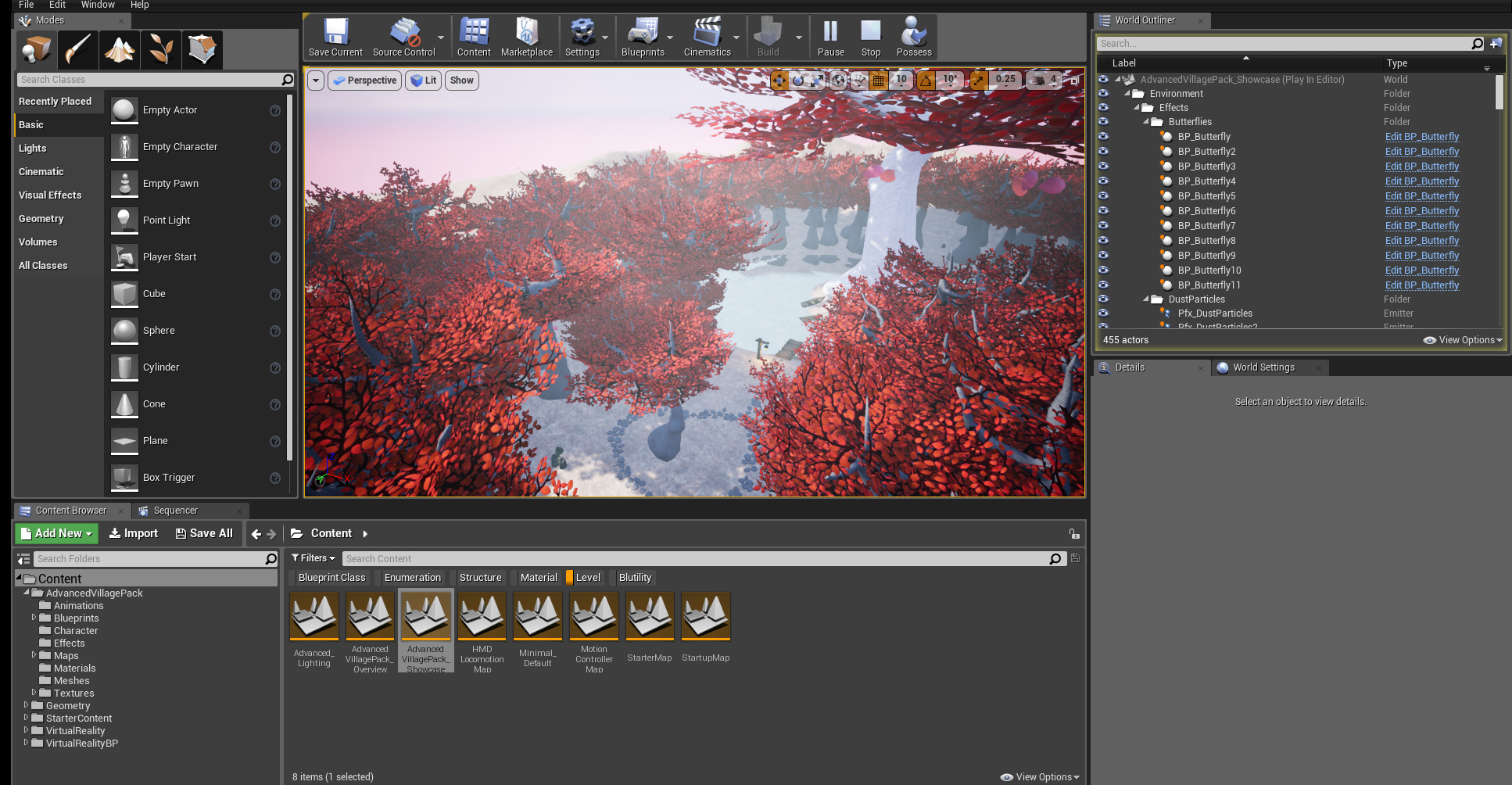

Technology

Unreal Engine 4 / Motion Capture / Maya

Time

2020

Team

Sicheng Wang/ Shimin Gu/ Shuting Jiang/ Lane Shi Otayonii.

Role

Technical Director / Environment Artist

It is an endeavor to give new media a poetic expression. Our inspiration came from rethinking the different ways media and technologies can be used. We build on the sense of distance and distortion in existing 3D modeling to create a cold and alienating virtual world. Within this coldness and alienation, unidentified characters wander through the virtual world and talk tenderly about their hometowns. Through dialogue between virtual characters, monologues, and poems, we present in turn our confusion about the world, the experience of large-scale migration driven by globalization, and our thinking about information technology.

Island

3D Animation | Motion Capture | Live Dance Performance | NYU IDM Thesis

What does solitude mean to you? What are the possibilities motion capture could bring to psychological studies?

I found that although many people sometimes choose solitude, much of their solitary experience starts from painful situations, and they cannot fully enjoy it. The piece is about how to use the opportunity solitude provides to turn a negative state of being into a positive one.

The whole performance is divided into four chapters: escape, dissociation, choice, and solitude. Pain and struggle are shown at the beginning and in the middle, and the journey ends in peace, which forms a clear narrative arc.

Technology

Motion Capture / MotionBuilder / Maya / Houdini / Premiere

Time

2019

Performer

Sarah Amores

Advisor

Toni Dove / Mark Skwarek

Verbal language, facial expressions, and body movement can all be used to express emotions, but body language is more direct and harder to conceal than the others. Dance uses more exaggerated movements than daily life does, which gives it the power to “speak” directly to people.

Idea

With urbanization, more and more people tend to live in cities, and escaping to nature is sometimes not easy, either because of the costs or because of transportation challenges.

Technological progress also profoundly affects the human experience, including solitude. With the widespread use of social media and the perceived pressure to always be online, spending time alone has become increasingly difficult. But technology may also foster solitude, because it can reduce the time and energy people need to spend on human interaction. Meanwhile, how we spend our solitary time is also changing.

It’s a practical question for all of us: how can we enjoy solitude in our stressful urban daily lives, with so many constraints?

Inspiration

Bodies in Motion

The motion of human bodies can express a lot of inner feelings, and how performers interact with their surroundings (which can be a virtual world or a physical space) can reflect inter-human or human-society relationships, which might be a good way to express my idea.

Physical Installations


Early Test

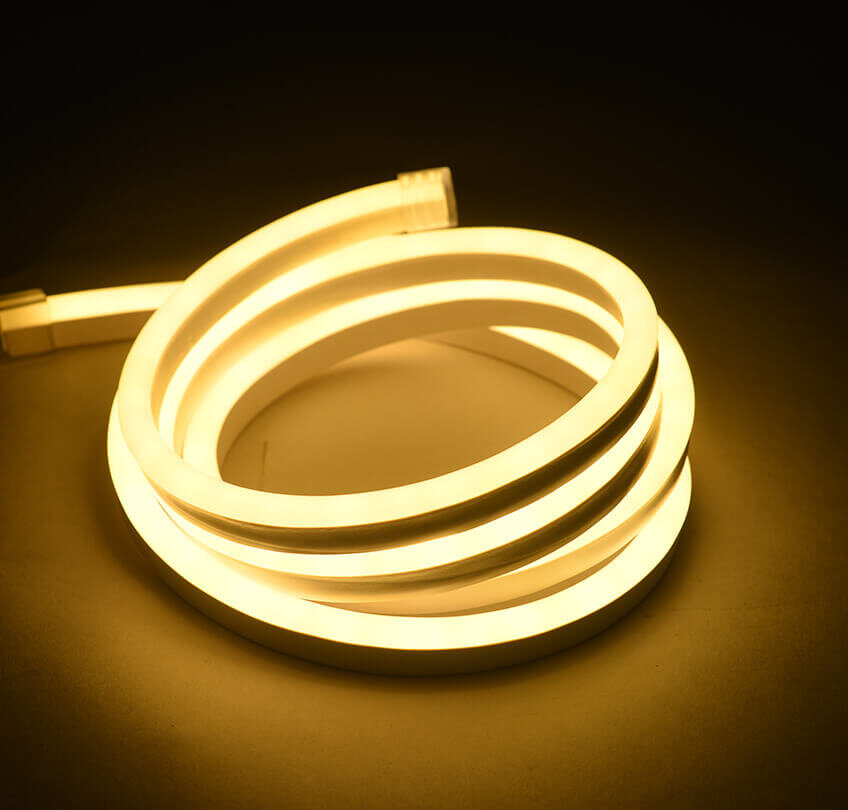

I decided to set an LED cube on the stage as the enclosed space for my dancer to perform in. I was also thinking of building an audio-reactive LED system to add more interaction, but I wasn’t sure about the final effect on stage, so I began by building a small prototype.

I went to Canal Plastic Center to buy some acrylic sticks and to an LED shop to look at LED strips. I cut the acrylic sticks to equal lengths and glued them together. To test the audio-reactive function, I used a microphone sound detector as the signal input and hooked it up to an LED light.

Even after adjusting the dial to change its sensitivity, I still felt the light flashed too much to be appropriate for the show. I also didn’t like the point-light look of the LED strips. As a result, I bought some neon light strips to see if they looked better.
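The audio-reactive test described above can be thought of as a simple threshold on the microphone level. The sketch below is only an illustration in Python, not the circuit or code used in the prototype: the function names, the sample readings, and especially the `smoothing` parameter are assumptions. It shows why a light driven directly by a threshold flashes a lot when the sound level hovers around the sensitivity setting, and how an assumed moving average would calm it down.

```python
def led_states(levels, threshold, smoothing=0.0):
    """Return on/off LED states for a sequence of microphone levels (0.0 to 1.0).

    threshold plays the role of the sensitivity dial. smoothing (0 <= s < 1) is an
    assumed exponential moving average and was not part of the original prototype.
    """
    states = []
    envelope = 0.0
    for i, level in enumerate(levels):
        if i == 0:
            envelope = level
        else:
            envelope = smoothing * envelope + (1.0 - smoothing) * level
        states.append(envelope > threshold)
    return states


def count_flashes(states):
    """Count off-to-on transitions, i.e. how often the light visibly flashes."""
    flashes = 0
    previous = False
    for state in states:
        if state and not previous:
            flashes += 1
        previous = state
    return flashes


# Hypothetical, hand-written readings that hover around the threshold.
levels = [0.2, 0.6, 0.3, 0.7, 0.2, 0.8, 0.3, 0.7]
raw = led_states(levels, threshold=0.5)
smoothed = led_states(levels, threshold=0.5, smoothing=0.8)
print(count_flashes(raw), count_flashes(smoothed))
```

With readings like these, the unsmoothed light toggles on almost every other sample, which matches the "flashes too much" impression; changing only the threshold moves the problem rather than removing it.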

For the cube, I bought transparent PVC tubes and joints instead of acrylic because they are cheaper and easier to assemble.

Assemble

I brought the PVC tubes to the Black Box and assembled them into a cube frame, the most important prop.

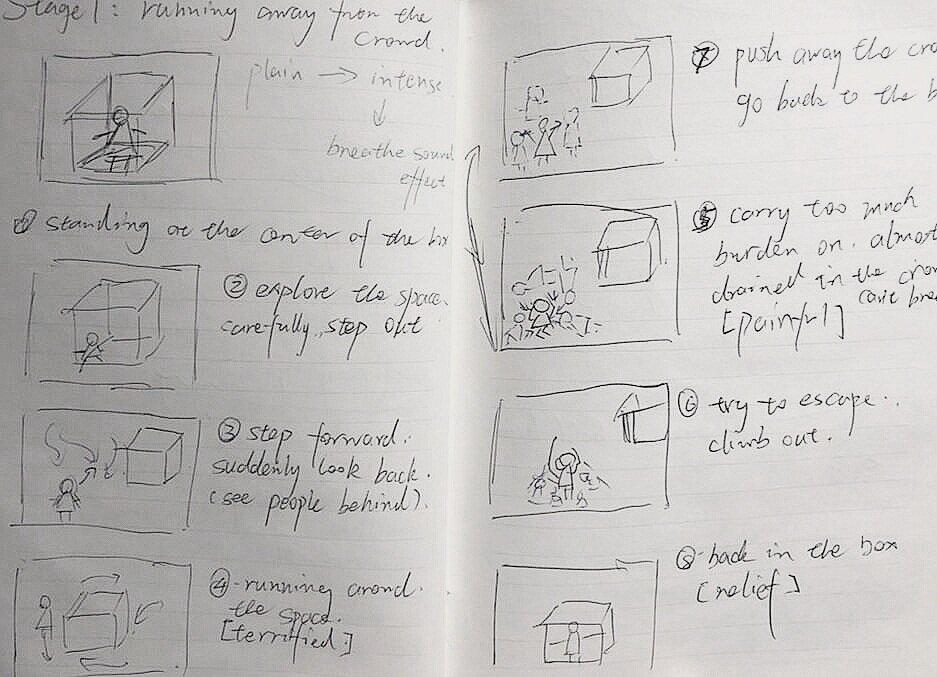

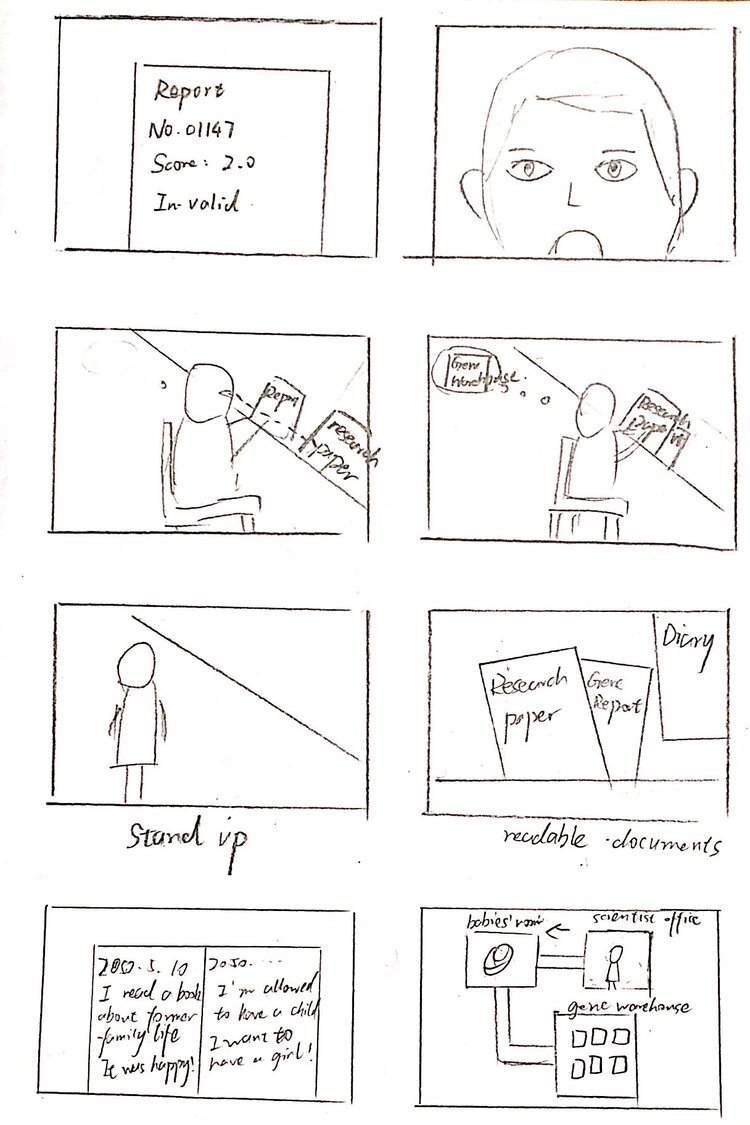

Storyboard

Based on the four scenes I wanted to show in the performance, I created a storyboard as the guide for my mocap recording.

Sound

To help the dancer get a better sense of what I wanted during the recording, I found some royalty-free songs online. I used a website called Epidemic Sound, which lets you search by mood and movement; I found this very useful.

Besides the music, I also wanted to add some sound effects, like breathing and annoying ambient sound, so I found some on Freesound.

Recording

Before we started the formal recording, I played the soundtracks I had chosen and asked Sarah (my dancer) to dance freely to them. I saw this part as a test of how well the music fit, as well as a chance to get some surprisingly good takes from the dancer’s perspective.

For the second part, I walked through my storyboard with Sarah and gave her instructions while she was dancing. For each scene, we recorded two or three takes as backup. My advisor Toni Dove also came by the studio to help me with the process.

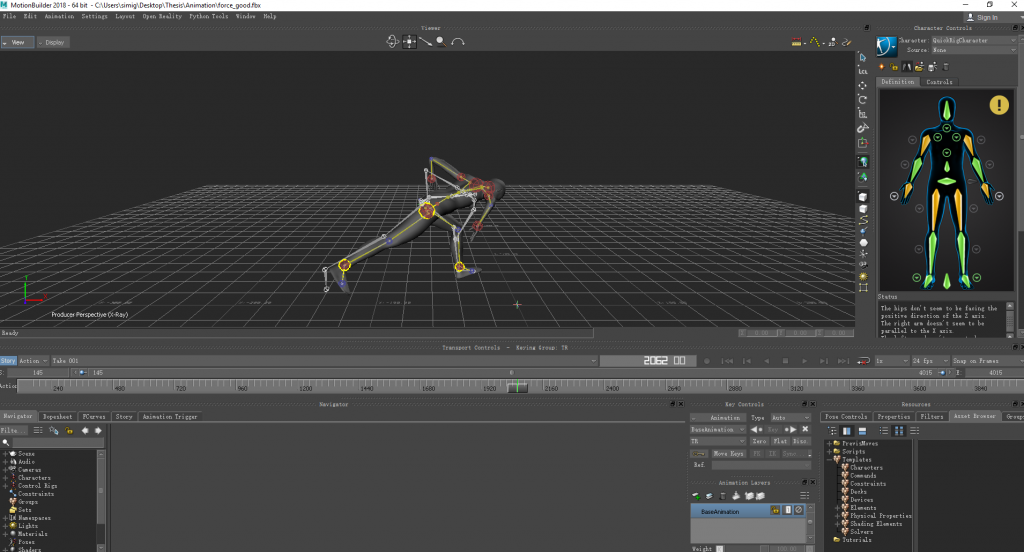

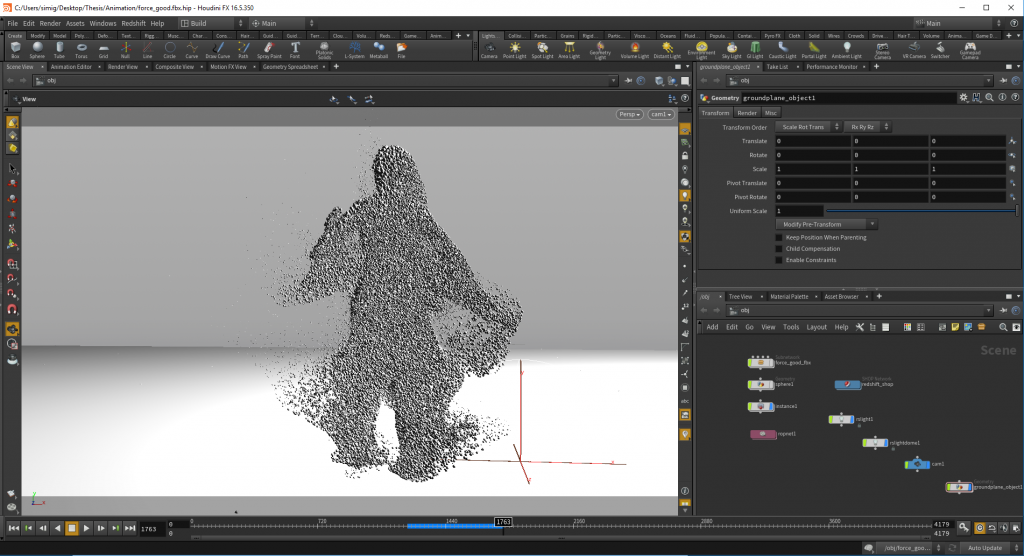

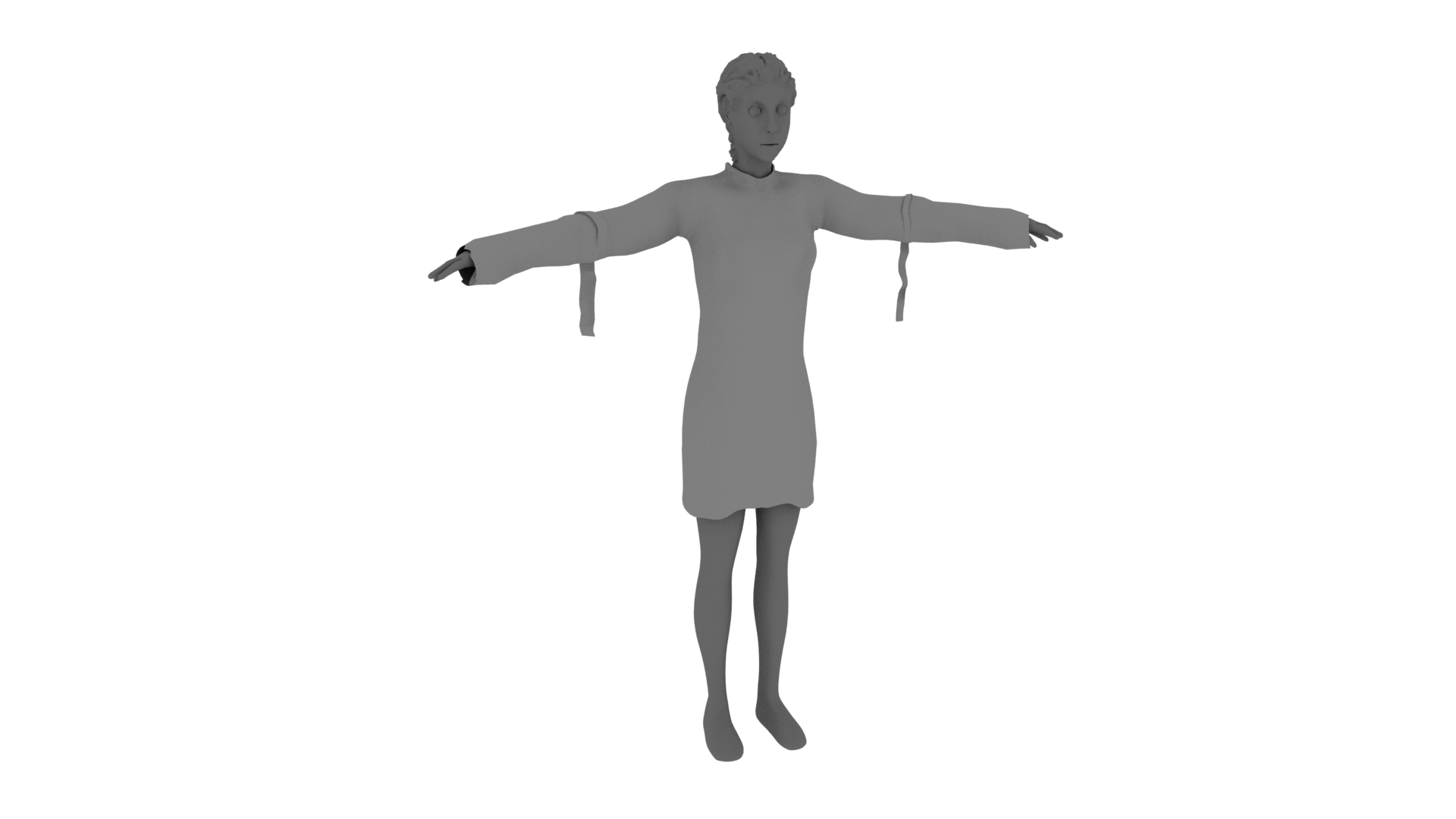

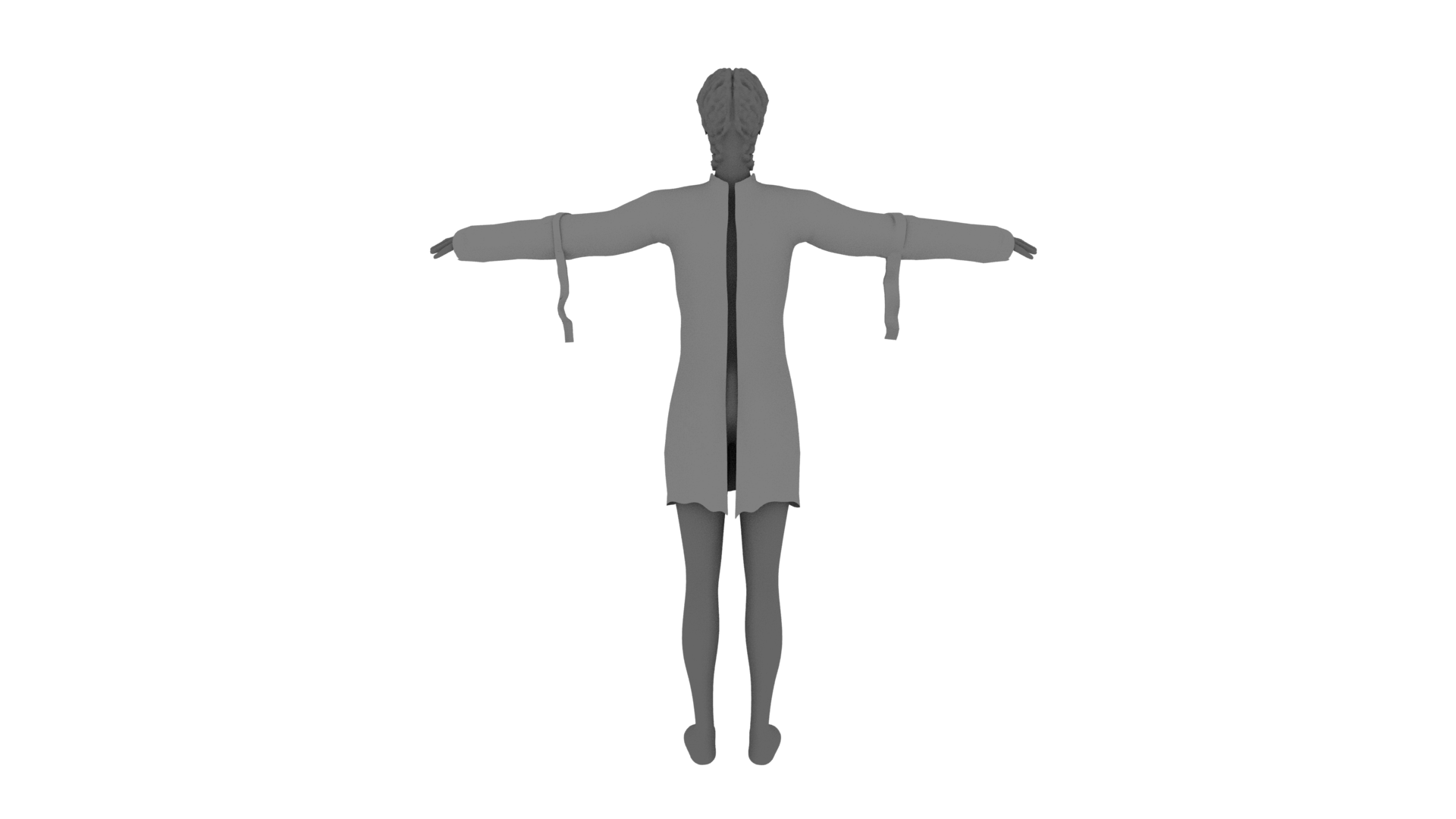

Render

I assigned the motion capture data to my rigged female model and baked the animation in MotionBuilder. Then I imported the animation into Houdini to create the particle effects and rendered them out. Finally, I imported everything into Premiere for editing.

Conclusion

Everyone’s experience of solitude is different. Through my research, I found that some people enjoy solitude because they love the freedom, the quiet, or the efficiency it brings them, but most people’s solitary experiences start from unpleasant emotions. They see solitude as a way to temporarily escape from reality. Escaping is not the ultimate way to solve problems, but it does give people time to take a breath and recover from pain, and we should respect that. I know it’s hard, but we should try to take advantage of the opportunity urban solitude offers to turn a painful state of being into a positive one. Maybe we can’t change our outer conditions much, but we can transform ourselves from the inside. I also want to mention that sociability doesn’t conflict with solitude: a person needs social contact as well as time alone.

Next Step

For this project, the number of participants and the amount of academic research I conducted were limited, so the final result might be inaccurate and subjective. Much more study is still needed. After the performance, I ran a feedback session and got a lot of valuable suggestions. The project would be stronger if I had the chance to do more iterations.

Also, I’d like to keep exploring the use of motion capture not only as a way to record data for games and animations but also as a form of performing art. In a future version of the project, I would like to do the motion capture live and get the audience involved in the performance, which might enhance the experience.

As human technology and culture continue to develop, the forms solitude takes may keep changing, so imagining that future is also interesting.

AAOX3

Live Motion Capture | Dance Performance | Projection Mapping

AWAKE, ALERT, and ORIENTED to PERSON, PLACE, and TIME.

AAOX3 weaves together an amalgamated narrative about transformation to create a live Motion Capture experience that stretches the intimacy of performance to disrupt our moral expectations about violence within the virtual world.

In this piece, the performer confronts three Janusian representations of the self with the help of active audience members. This audience impetus provides agency to move the story forward from embodiment to empowerment. AAOX3 pushes the technical, musical, and emotive boundaries of Motion Capture technology, transforming the space into an experimental vignette about the inescapable pain of metamorphosis.

Team

Shimin Gu/ Fanni K. Farakas/ Asha Veeraswamy/ Tianyue Wu/ Nia Farrell (Performer)

Role

Technical Director

Technology

Live Motion Capture / UE4 / Nvidia Flex / Maya / Spout / MadMapper

Time

2018

Over the course of 7 weeks, 5 artists across disciplines developed AAOX3, culminating in a final Bodies In Motion Showcase at NYU Metrotech.

It was also invited to be shown at RLab’s opening.

Concept

In this piece, mirrors represent the window between physical freedom and virtual constriction. By facing a mirror physically, the performer must combat their mental illness virtually.

The three illnesses we chose to work with are schizophrenia, anxiety, and depression. Schizophrenia is represented by multiple shadow selves. Anxiety is represented by a claustrophobic tar bubble. Depression is represented by the crushing weight of water.

Motion Capture Interaction

The audience is invited to help the performer face their fears; they are given the agency of when and how this happens through a mirror that can be rotated.

This mirror is a camera in the virtual world. When the mirrors face away from the performer, the story pauses and the fears start to dissolve. When the mirrors face the performer, the story moves forward and the fears start to consume.
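The mirror rule above can be sketched as a small update loop. This is a rough Python illustration only, not the UE4 implementation used in the piece: it models a single mirror in 2D, and the function names, the 30-degree tolerance, and the `fear_rate` value are all assumptions made for the example.

```python
import math


def mirror_faces_performer(mirror_pos, mirror_yaw_deg, performer_pos, tolerance_deg=30.0):
    """True when the mirror's facing direction points at the performer (2D, top-down).

    tolerance_deg is an assumed cone half-angle, not a value from the piece.
    """
    dx = performer_pos[0] - mirror_pos[0]
    dy = performer_pos[1] - mirror_pos[1]
    angle_to_performer = math.degrees(math.atan2(dy, dx))
    # Wrap the difference into [-180, 180) so 350 and -10 degrees compare equal.
    diff = (angle_to_performer - mirror_yaw_deg + 180.0) % 360.0 - 180.0
    return abs(diff) <= tolerance_deg


def step(state, facing, dt, fear_rate=0.5):
    """Advance (story_time, fear) by dt seconds.

    Facing the performer: the story moves forward and the fear grows.
    Facing away: the story is paused and the fear dissolves.
    """
    story_time, fear = state
    if facing:
        story_time += dt
        fear = min(1.0, fear + fear_rate * dt)
    else:
        fear = max(0.0, fear - fear_rate * dt)
    return (story_time, fear)


state = (0.0, 0.0)
facing = mirror_faces_performer((0.0, 0.0), 0.0, (1.0, 0.0))
state = step(state, facing, dt=1.0)
```

Keeping the story clock and the fear intensity as separate values means the audience can pause the narrative without resetting it, which is the kind of agency the mirror is described as giving them.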

Model

Story

Teaser

Garou World

VR Multiplayer Publishing Platform | Interaction Design | UX Design

Garou is a publishing platform & marketplace for amazing VR experiences.

A place where people can physically go visit, interact and transact. A registry of VR experiences that uses real-world geography as the primary device for navigation.

Company

Garou, Inc.

Role

Interaction Designer / Developer

Awards

Epic Mega Grants 2020

2nd Prize of Verizon ‘Built on 5G’ Challenge 2020

Technology

UE4 / Amazon Web Services / Perforce / 3ds Max / Figma

Time

Since 2019

Ideation and Task Planning

Design System

PAIN POINT

The design was not consistent or user friendly

RESPONSIBILITY

Referencing existing VR design guidelines

User-centered principle, tailored design for VR

Branded color palette

Detailed annotation for developers

In-game Web Browser

Menu Design

PAIN POINT

The avatar menu needs to be scalable and easy to navigate after the introduction of new avatars

VERSION 01

Limited avatar choices, not scalable

Male/female avatars are mixed

VERSION 02

Belt design, more scalable

Click the center icon to change gender

Not intuitive enough

VERSION 03

Carousel design, easy to understand

Multiple ways to navigate

Preview 3D puppet

Can only select preset avatars

NEXT VERSION

Customization

More color choices

Gender/race friendly

INTERACTION DESIGN

PAIN POINT

There needs to be something more than clicking or touching a flat screen for art installations in the Guggenheim

RESPONSIBILITY

Interact with 3D objects

Give visual hints/cues as guidance

Haptic feedback

Function Design

PAIN POINT

We want to design an annotation tool that allows users to paint in space and leave messages for friends.

RESPONSIBILITY

Visual feedback

Increased user engagement

Users spend more time in it

EARLY SKETCH

OTHER DESIGNS

Craft

Inclusive VR Painting Solution | Inclusive UX Design

Designing for every person means embracing human diversity as it relates to the nuances in the way we interact with ourselves, each other, objects, and environments. Designing for inclusivity opens up our experiences and reflects how people adapt to the world around them. What does this look like in the evolving deskless workplace? By deskless workplace, we mean people who aren't constrained to one traditional or conventional office setting.

Goal: Design a product, service, or solution to solve for exclusion in a deskless workplace.

Since we are all designers in a program that involves various artists, we connected with the idea of designing for artists with limited hand mobility who are trying to create content in VR. Our professor, Dana Karwas, and Nick Katsivelos from Microsoft encouraged us to work in this area since not much has been done.

Team

Shimin Gu / Cherisha Agarwal / Joanna Yen / Pratik Jain / Raksha Ravimohan / Srishti Kush

Role

Technical Director / UX Researcher

Technology

UE4 / Oculus Rift / Sketch

Time

2018

Showcase

Problem Statement

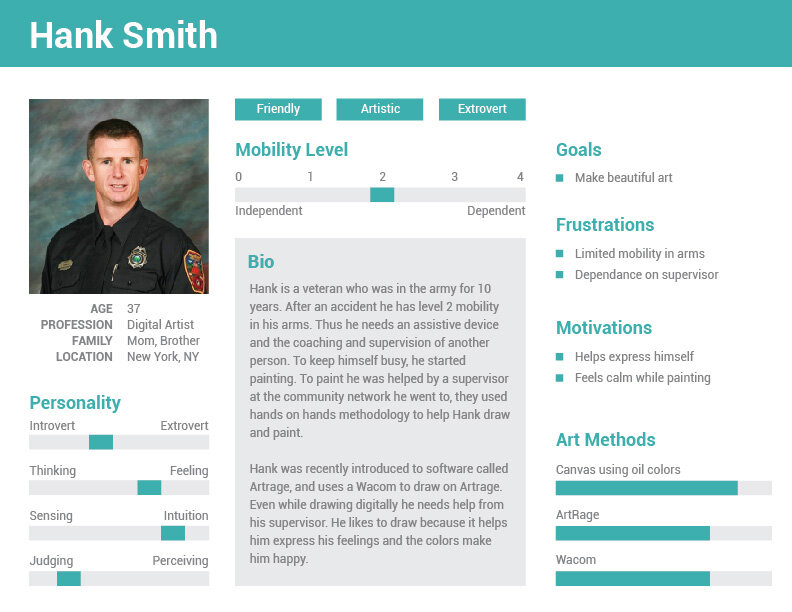

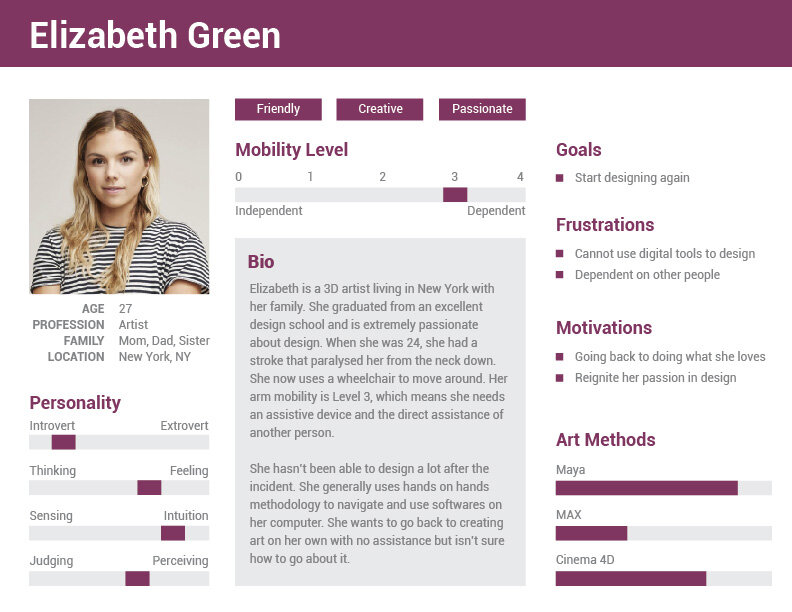

How might we build a multi-modal tool for people with limited mobility in their arms to create art in Virtual Reality?

Initial Research

Approaching this idea was not easy. It involved talking to many designers at IDM and understanding their workflows. We spoke with them about how they use software and the problems that people with limited hand mobility might run into. We were fascinated by the idea of creating a multi-modal application that uses eye tracking and voice commands to enable people to draw in VR. To dive deep into the technology and understand its use, we read a few papers listed here and got great insights from professors at NYU such as Dana Karwas, Todd Bryant, and Claire K Volpe, from UX research experts such as Serap Yigit Elliot from Google, and from Erica Wayne from Tobii. Every person we spoke to gave us more insight into tackling the challenge from a user experience, VR technology, and accessibility perspective.

Interview

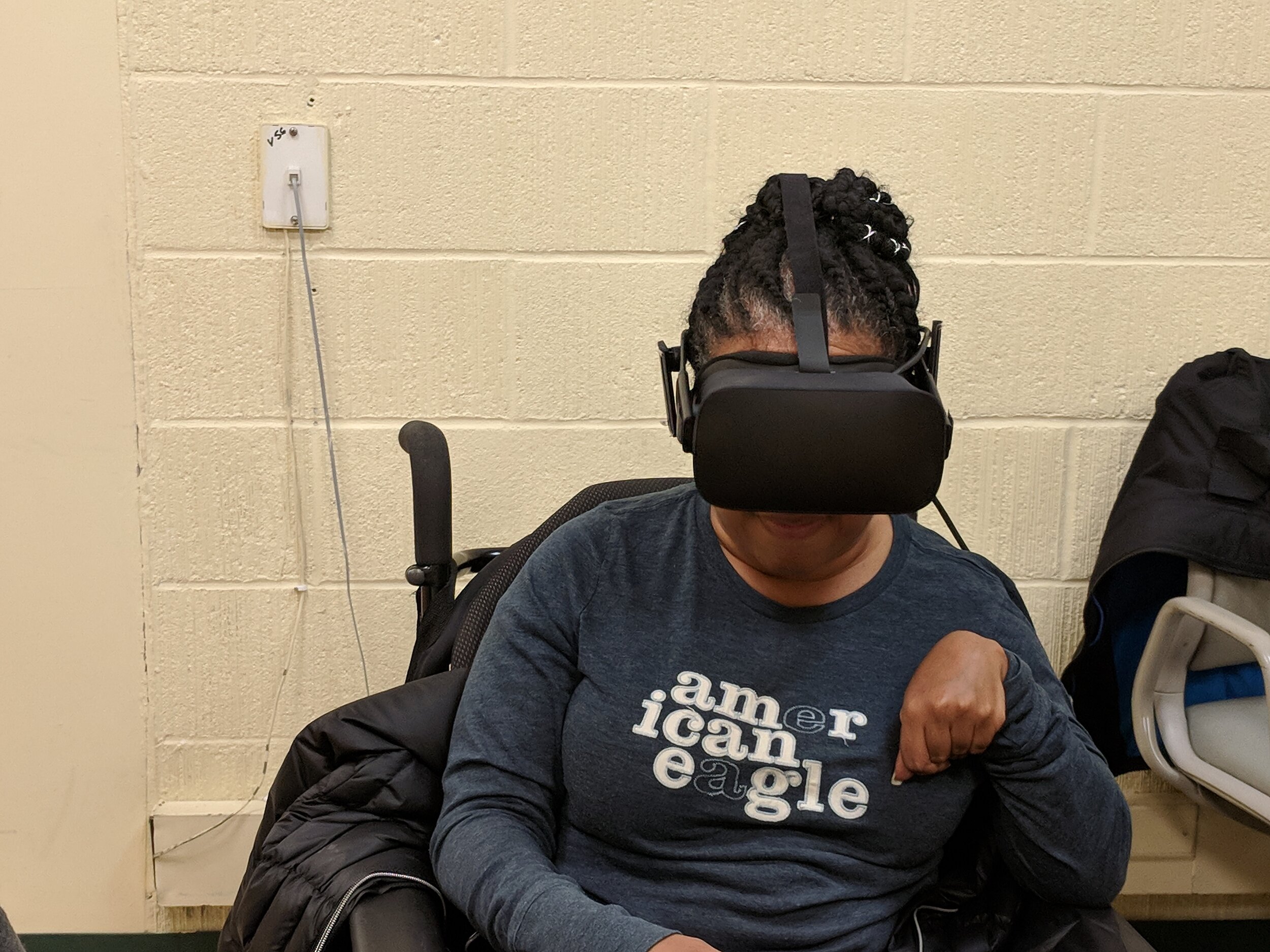

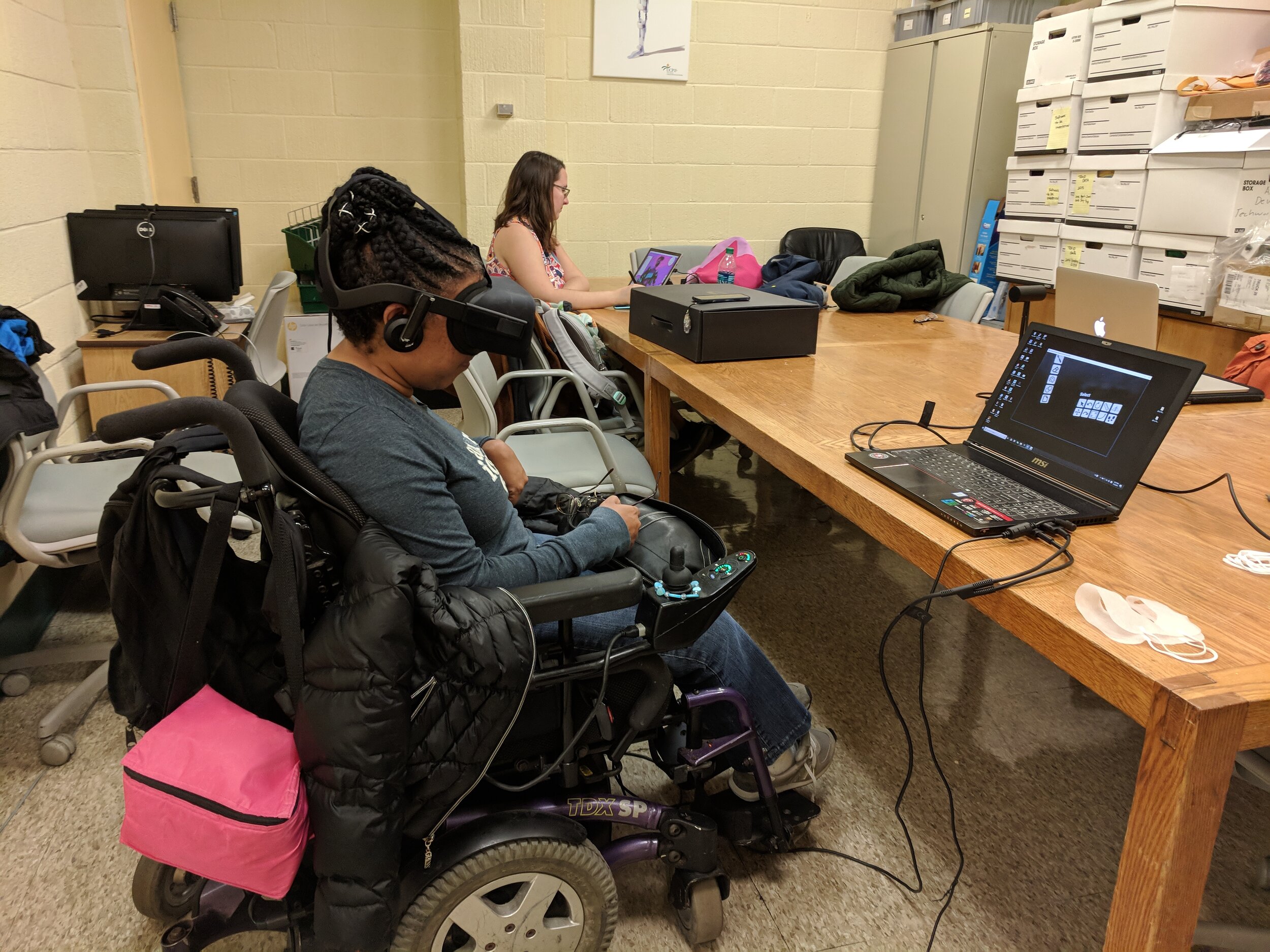

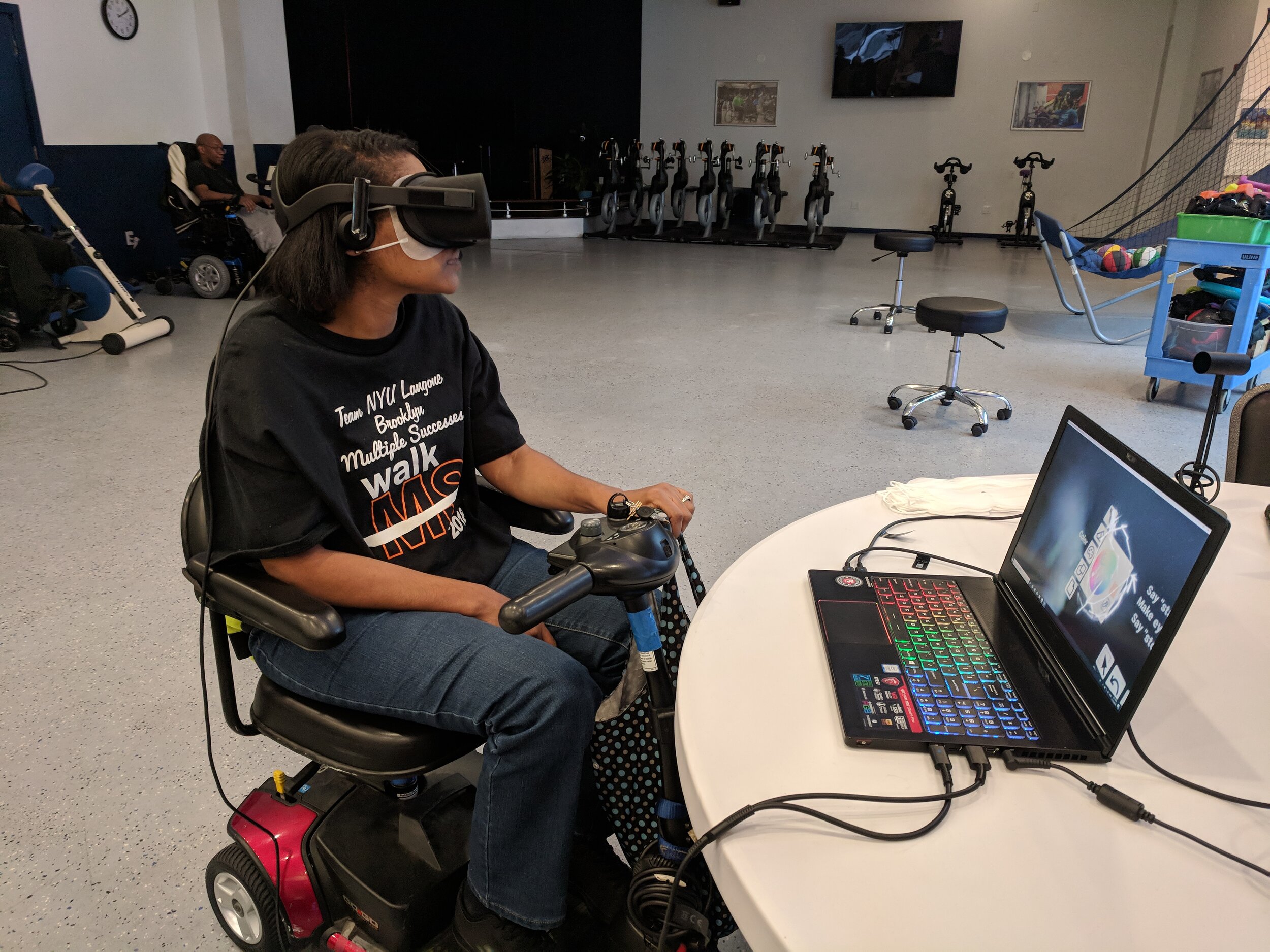

We had conversations with 115 individuals to validate our assumptions. We tested our prototype with potential users who face mobility issues. We also spoke to accessibility experts and disability centers to get a sense of how they would invest in our product to benefit their community.

PEOPLE WITH DISABILITIES

Paralysis

Cerebral Palsy

Multiple Sclerosis

Spinal Cord Injury

ORGANIZATIONS

Hospitals

Senior Homes

Disability Centers

Accessibility Research Centers

EXPERTS

VR Prototypers

UX Designers

3D Modellers

Accessibility Experts

Therapists

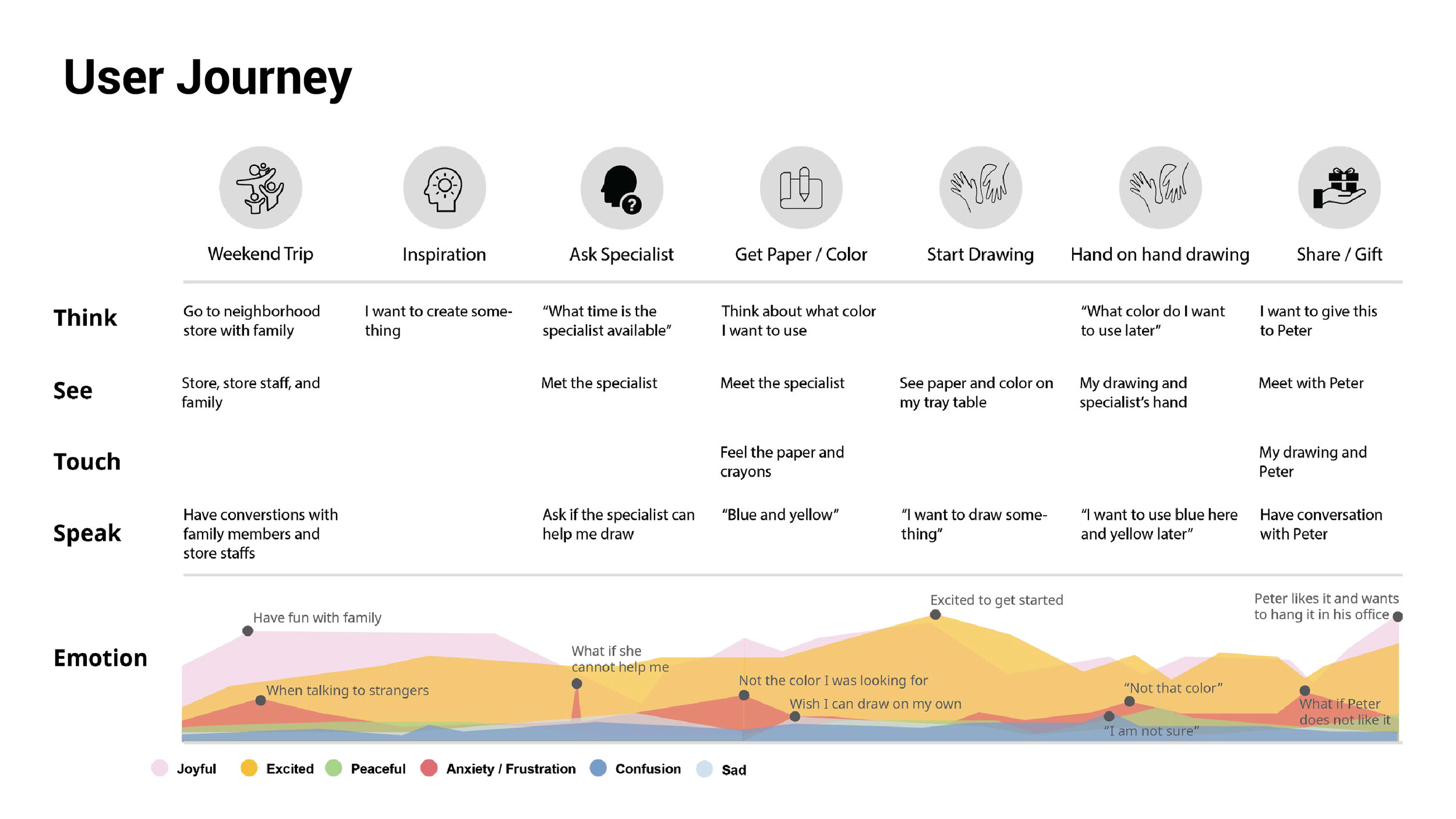

User Persona and journey

Low-fi Prototype

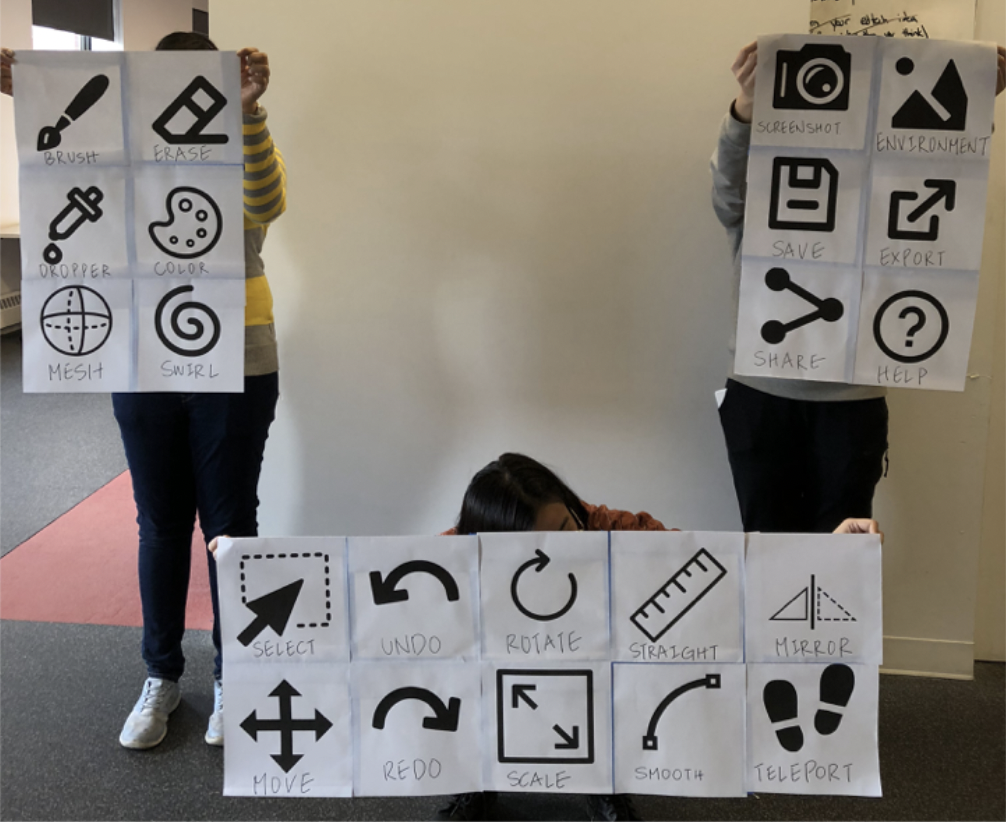

After extensive research, we decided to get our hands dirty and build our very first prototype. How do we make a prototype for an art tool in VR? We figured out that the best way to convey our interaction was through a role-play video. We did some rapid prototyping using paper, sharpies, and clips to bring our interface to life.

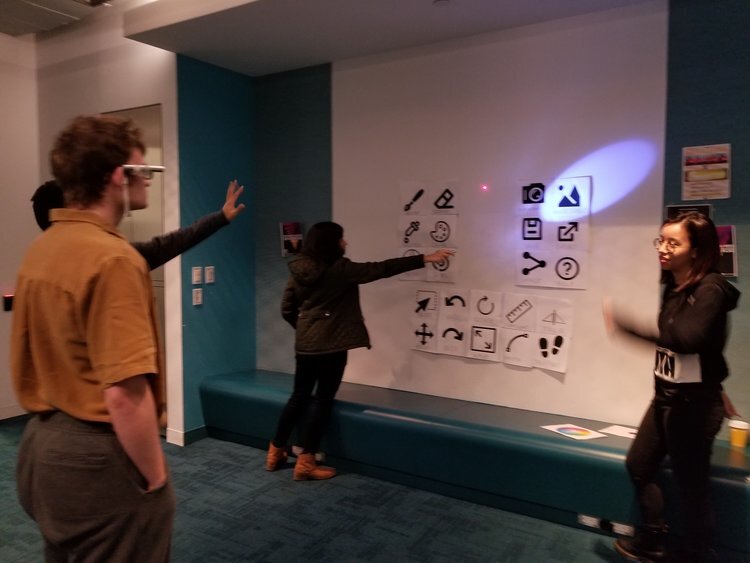

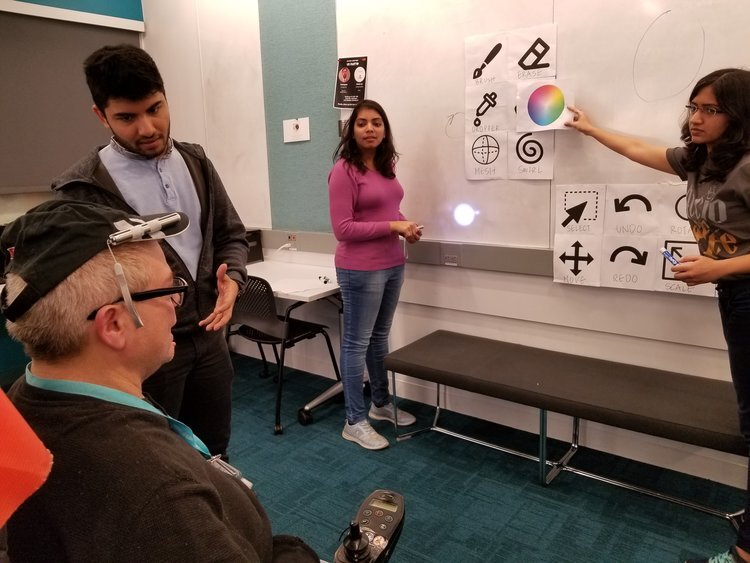

Paper Prototype and User Test

Once we got insights and a whole lot of motivation from our users, we decided to give a structure to our interface. This time, we printed our tools on paper and stuck them together in an organized way. We used a laser pointer as the “eye tracker” and we were all ready for our second round of user testing. The whole process of prototyping for VR was very interesting and this process made us think clearly about details of the interactions and potential issues.

Our second round of user testing revealed two kinds of scenarios, depending on prior experience. Users who had experience with design software could complete the tasks well and pointed out potential issues with the concept, such as figuring out the z-axis, feedback for interactions, and adding stamps. Users from the ADAPT community who did not have prior experience wanted the tools to be less ambiguous and felt that some of the tools were unnecessary.

Taking cues from these responses, we went on to make the high-fidelity prototype in Unreal Engine, a good tool for building virtual reality content. To make a working prototype, we needed to accomplish several tasks, as explained below:

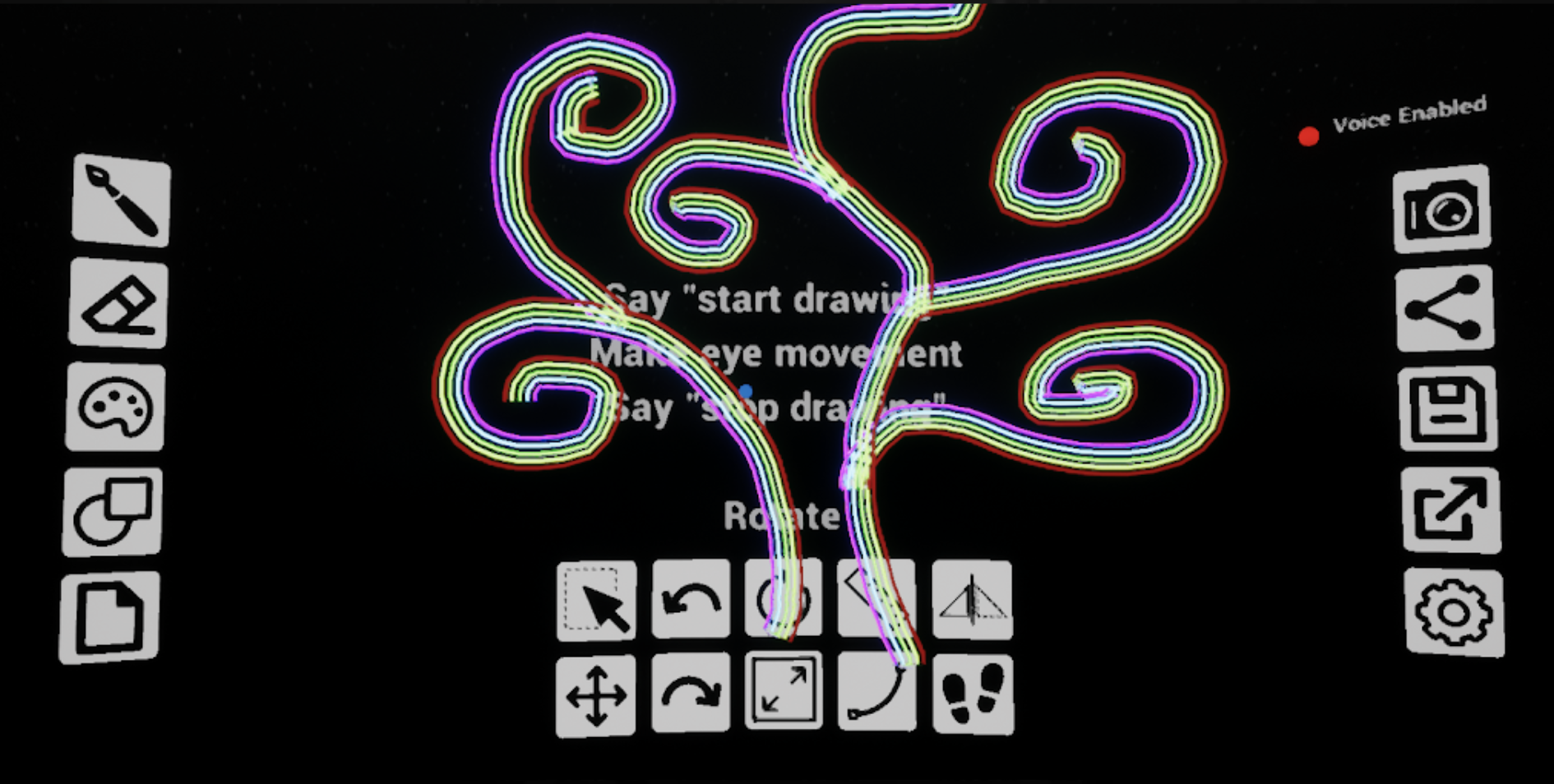

High-fi Prototype

The final prototype was built in Unreal and supported drawing using head movements, changing the stroke, changing the environment, and teleporting.

Our prototype realized the most basic function of an eye-tracking painting tool in virtual reality by using head movements. The user could choose tools, paint, teleport, and change the environment, but the prototype was still constrained by some technical limitations. A few functions, such as erase, undo, and redo, could not be realized in Unreal for now, but we hope to make them work using other software and hardware. We also hope to look into eye-tracking technology so that users can select tools and draw with gaze movements. In our current prototype, the voice instructions are manually monitored. We would like to implement this functionality as well to make a truly multi-modal solution for our users.
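Drawing with head movements can be pictured as casting a ray from the headset along its forward direction and painting wherever that ray meets a canvas. The sketch below illustrates that idea in plain Python and is not the Unreal implementation: the flat canvas plane, the function names, and the sample values are assumptions made for the example.

```python
def head_ray_hit(head_pos, head_forward, canvas_point, canvas_normal):
    """Return the point where the head's forward ray meets the canvas plane, or None.

    The canvas is assumed to be an infinite plane given by a point and a normal.
    """
    denom = sum(f * n for f, n in zip(head_forward, canvas_normal))
    if abs(denom) < 1e-9:
        return None  # looking parallel to the canvas, so no intersection
    diff = [c - h for c, h in zip(canvas_point, head_pos)]
    t = sum(d * n for d, n in zip(diff, canvas_normal)) / denom
    if t < 0:
        return None  # the canvas is behind the head
    return tuple(h + t * f for h, f in zip(head_pos, head_forward))


def collect_stroke(head_samples, canvas_point, canvas_normal):
    """Turn a sequence of (head_pos, head_forward) samples into stroke points."""
    stroke = []
    for head_pos, head_forward in head_samples:
        hit = head_ray_hit(head_pos, head_forward, canvas_point, canvas_normal)
        if hit is not None:
            stroke.append(hit)
    return stroke


# Hypothetical samples: the user looks straight ahead, then away from the canvas.
samples = [
    ((0.0, 0.0, 0.0), (0.0, 0.0, 1.0)),
    ((0.0, 0.0, 0.0), (0.0, 0.0, -1.0)),
]
stroke = collect_stroke(samples, (0.0, 0.0, 2.0), (0.0, 0.0, -1.0))
```

Fixing the canvas to a plane is one way to sidestep the z-axis question raised in user testing, at the cost of drawing on a surface rather than freely in space.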

User Test

Next, we went out to meet potential users from the Adapt community. Adapt, formerly known as United Cerebral Palsy of New York City, is a leading non-profit organization providing cutting-edge programs and services that improve the quality of life for people with disabilities. There we met Carmen, Eileen, and Chris, who were very interested in painting and loved the idea of drawing in VR. This kind of experience was new to them and, to our surprise, we got good feedback from them. All three of them expressed interest in trying out a tool that enabled them to draw using eye movements and voice commands.

Drugmatic

VR Experience | Reality Virtually Hackathon 2019 | MIT Media Lab

Drugmatic is an educational, immersive VR experience that helps teens fully understand the effects of drugs. Based on research into what people actually feel after taking drugs, it gives teenagers a taste of the illusion. During the experience, they also have chances to make a change and stop this dramatic, weird, and fake world. Once a decision is made, there is no going back. Fantastic or horrifying, they have to go through the entire illusion by themselves. By the end of the experience, they will realize how unpredictable the results of drugs are.

TECHNOLOGY

UE4 / Tilt Brush / Maya / 3ds Max

Oculus Rift/ Leap Motion

TIME

2019.01

TEAM

Shimin Gu (Self)

Yunzhu Li / Shuting Jiang / Tianyue Wu / Wentao Pu

ROLE

Technical Director

Award

Drugmatic was a Top 8 ‘Best VR’ finalist out of 105 projects

INSPIRATION

Drugs have always been a serious problem, especially for the younger generation. Unfortunately, most teenagers start their journey with drugs out of curiosity. So how might we help them stay away from drugs while respecting their curiosity and imagination?

What It Does

"Drugmatic" is a virtual reality drug experience aimed at teenagers. Based on research into what people actually feel after taking drugs, it gives teenagers a taste of the illusion. During the experience, they also have chances to make a change and stop this dramatic, weird, and fake world.

Once a decision is made, there is no going back. Fantastic or horrifying, they have to go through the entire illusion by themselves. By the end of the experience, they will realize how unpredictable the results of drugs are.

How We Built It

We started this project by exploring the VR drawing tools Tilt Brush and Quill VR in order to draw the illusions caused by drugs. With this VR experience, we tried to rebuild the scene where this event happened. Players can make their own decisions using Leap Motion.

BRAINSTORMING AND PROBLEM DEFINITION

To decide on the concept, we held a brainstorming session. We considered several ideas at first, but in the end we made up our minds to dive into a drug experience.

RESEARCH

For us, it's very difficult to recreate an experience without a real reference, so we started the project by researching what the illusions are like after taking drugs. We looked at articles, videos, and movies, and decided to create the entire illusion world based on that research.

STORYBOARD

We spent a lot of time on the storyboard. We hoped our players could enjoy the fancy part of the illusion but also see its scary side. It's very important to let our players know that the illusion of drugs can lead to a horrible world, with the scariest results that you may never have a chance to regret.

STYLE AND DESIGN

During our research, we found that drug illusions have a lot in common, such as higher color contrast, a fluid and strange world, and strange physical contact. These changes reminded us of hand drawing, so we decided to use Tilt Brush and Quill to build the world and animations. We also wanted our players to have some interaction within the illusion world.

PROGRAMMING AND EXPORTING ASSETS

This is when everything started to get messy. We watched a lot of tutorials, tried a million times to export our drawings into Unreal Engine, and learned how to build animations. The process was bitter, but it was also a fun journey.

Challenges We Ran Into

Develop a solution to explore the world of drug effects based on research.

Integrate Tilt Brush assets into different graphics engines

Limited time and resources to create an educational experience that depends on high-quality art pieces.

Accomplishments That We Are Proud Of

Successfully bring Tilt Brush VR painter into the whole creation workflow.

Build an incredible illusion world.

Interaction

Natural hand movement instead of traditional controllers

Create your own illusion by hand drawing

Choose your own adventure

Incorporate taste and touch into your own VR experience

What We Learned

Drugs may make you high for a moment, but the cost of the illusion will be extremely high, too.

New creation tools

Teamwork

Time Management is important

Some toolkits are not compatible!!

What's Next for Drugmatic

Refine the project by adding interactions and visual effects

Research more on drug effects

Playtest among teenagers

Reach out to schools and offer this new tool to advisors and teachers.

Reach out to researchers and medical centers and offer our project as an aid to help people quit drugs.

Leo and Barry

Interactive VR Experience | Narrative | 3D Animation

This is an interactive animation about the friendship between two robots in VR.

Time

2018

Technology

UE4 / Oculus Medium / Maya / Mixamo / Oculus Rift

Concept

I got inspiration from the puzzle/adventure game Machinarium. I really like its steampunk-like art style, and I also wanted to create a warm story that happens between robots. The story is surreal, but it is about friendship, which should make it heart-touching.

Story

The main character, Leo, is a small robot who read a story about an iron giant and wanted to build one to be his friend, but he needed a battery to start it. He happened to know that another robot nearby, Barry, had collected a powerful battery, so Leo invited him to his house. Barry thought the battery was valuable and could serve as his backup, since he didn’t have much power left. If Leo wanted it, he had to do a lot of things to make Barry happy. After that, Barry promised to give Leo the battery, but Leo realized that he had just wanted a friend and he already had one. In the end, the two little robots danced in front of the giant.

Character

I created two small robots in Oculus Medium. After exporting them, I used MeshLab to reduce the face count and then uploaded them to Mixamo for auto-rigging and animation. Following my story, I found all the animations I might need and downloaded them into a folder for later use.

Leo

Barry

Environment Setting

I found a steampunk interior asset online, but I felt the environment was too crowded and I didn’t like the yellowish color, so I rearranged the asset, changed its color tone by adjusting the texture parameters, and reset the lighting. I also added a post-process volume to disable auto exposure, which I found annoying.

Original environment

After changing color tone and lighting

Animation

Since I planned to make an interactive piece, Barry’s animations should be triggered by user input, so I first tested how to trigger an animation with a key press. The difficult part was making every interaction follow the story’s logic.

Process in Unreal

Animation Map

Cast to animation map in level blueprint

Trigger by overlap and motion controller input
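One way to keep triggered animations in story order is a small sequence that only advances when its trigger conditions are met. The Python sketch below is an illustration of that idea, not the Blueprint logic used in the project: the class name and the animation names are hypothetical, and it assumes both the overlap and the controller input are required at the same time.

```python
class InteractionSequence:
    """Plays a fixed list of animations in order, one per valid trigger."""

    def __init__(self, steps):
        self.steps = list(steps)
        self.index = 0

    def handle(self, inside_trigger, button_pressed):
        """Return the next animation name if the trigger fires, otherwise None.

        Assumption: the user must overlap the trigger volume AND press the
        controller button; either one alone does nothing.
        """
        if self.index >= len(self.steps):
            return None  # the story has finished
        if not (inside_trigger and button_pressed):
            return None
        animation = self.steps[self.index]
        self.index += 1
        return animation


# Hypothetical animation names for illustration only.
sequence = InteractionSequence(["barry_wave", "barry_dance"])
first = sequence.handle(inside_trigger=True, button_pressed=True)
```

Because the sequence owns the current step, repeated or out-of-place input cannot skip ahead or replay an earlier beat of the story.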

SOUND

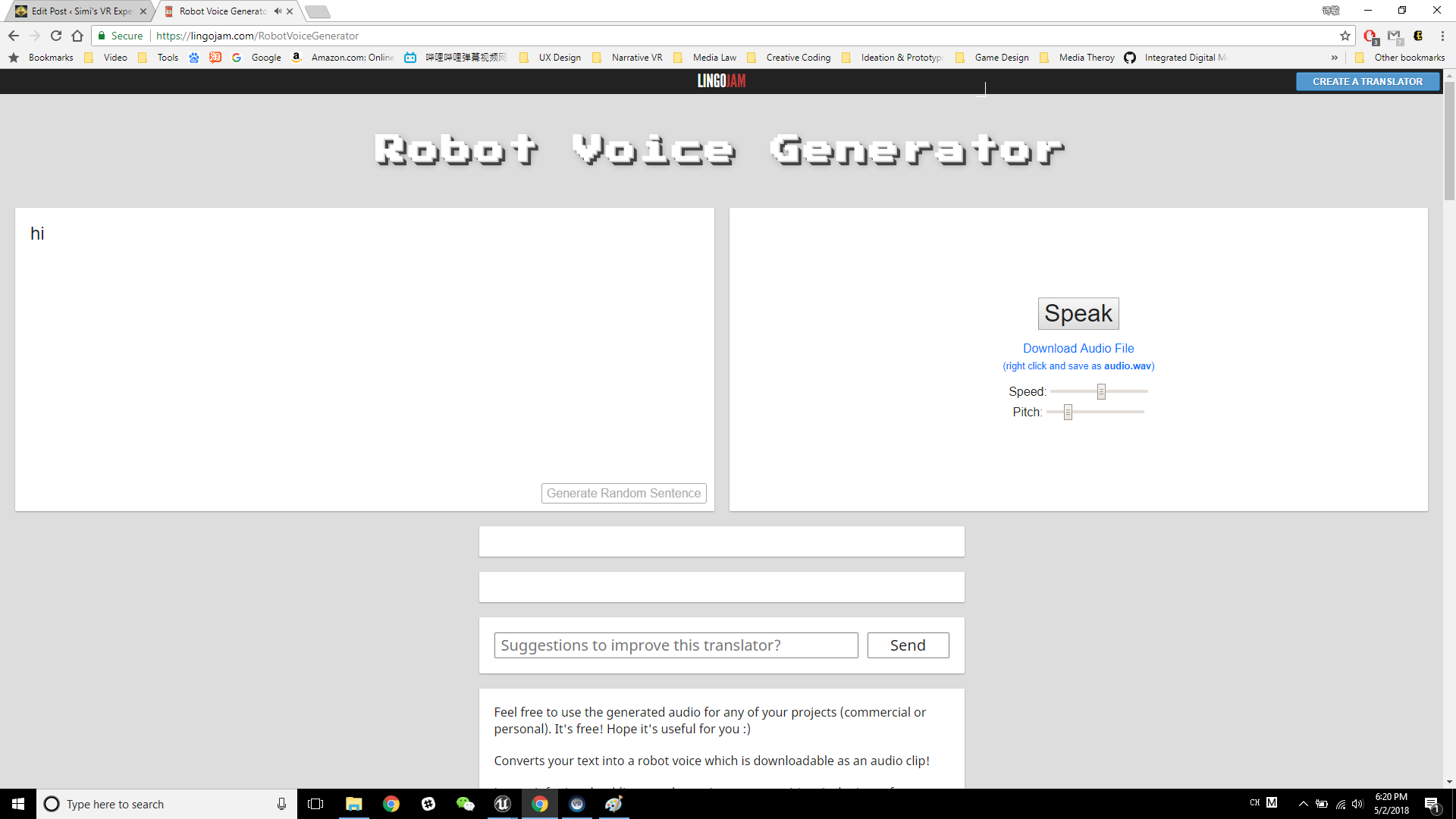

Generate robot voice

Robot voice triggered by user input and animation time

VR Animation: Two

Narrative VR Experience | 3D Animation

This is the first time I tried to tell a narrative story in virtual reality using Unreal. The short story is about loneliness and hope.

Due to a huge disaster, most of the elves who used to live in the forest have died or left, so only two elves live there now. The little male elf, Charlie, keeps praying to the old tree for help, but the tree never responds. The other elf tries to comfort him, but it doesn't work. Charlie is close to despair, but he sees sunlight through the leaves and still holds on to a little hope.

The audience will see the story from the perspective of the second elf.

Technology

UE4 / Oculus Medium / Maya / Mixamo / Oculus Rift

Time

2018

Idea

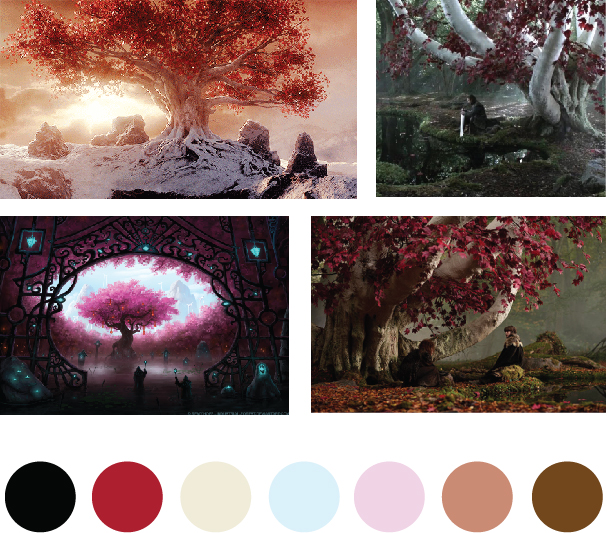

MOOD BOARD

STORYBOARD

Character

I decided to make my own character using Oculus Medium. Making VR in a VR environment is a very cool experience. I found a baby-sized body in Medium and used it as a reference, which was much easier for me than making it out of thin air. To make rigging easier, I changed his pose to the traditional T-pose. The cute little elf was then named Charlie! After that, I uploaded the .fbx file to rig the character and add animations.

Environment Setup

This part is about Unreal. I bought an asset package from the Unreal Marketplace because I thought its lovely animation style matched Charlie. But the color and placement of the assets were not what I had pictured, so I changed the placement of the elements and their color tone.

Animation in Sequencer

I used the Sequencer to do the animation. The difficult part for me was making Charlie’s animations continuous. I also found it hard to control the camera movement: what we see in normal preview mode is always a little different from what appears in VR mode, and I had to move the camera smoothly to avoid discomfort in VR.

100% Human

Future Dystopia | 3D Stealth Game

Imagine our civilization 20 years from now. Things have either collapsed beyond repair or life has become a harmonious dream. Through some crazy combination of wormholes and string theory, you have the opportunity to create a piece of information that will be sent back to the current population.

What does your imagined future look, sound, and feel like? What message would you transmit to the current population from the future?

Technology

Unity / Photoshop

Time

2017

Ideation: Imagine a Eugenics dystopia

Moodboard

Eugenics tree

Storytelling

Imagine we have fully cracked the secrets of our genes in the future. Maybe we will use that knowledge to produce perfect offspring. Here, perfect means higher intelligence, faster learning, and healthier mental and physical condition.

Because of this, our social system will change completely. It will become a militarized society. Everyone will be conceived in vitro, so marriage will no longer exist. The government decides whether you can have a baby based on your personal genes and social contributions. Natural births are not allowed. When a woman gets pregnant accidentally, she will be forced to have an abortion. If someone is allowed to have a baby, they will be assigned a well-matched sperm or egg as well as a set of good genes, such as high intelligence and a strong body. Although every baby uses the same set of good genes, the genes express differently after birth. Babies who cannot reach the common standard will be eliminated within one month.

Picture from Gattaca

Picture from Fallout Shelter

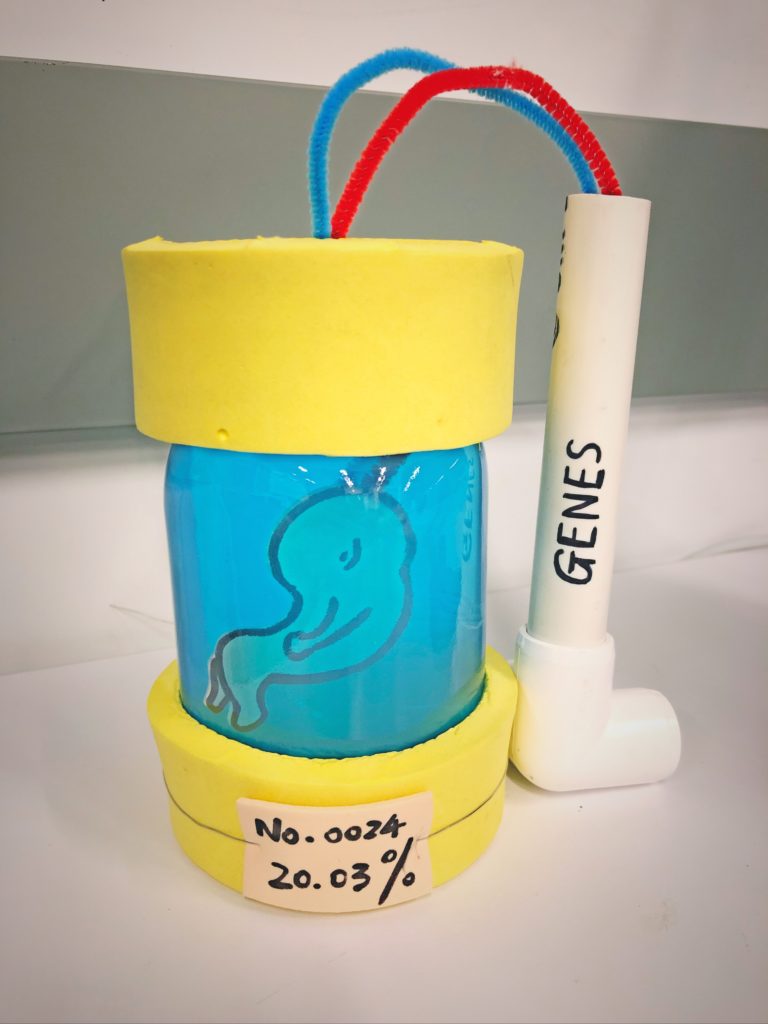

The main character is a male scientist who does gene research in the laboratory. He gets permission to have his own daughter. Usually, parents are not allowed to see their baby before the final gene report comes out, but the scientist has access due to his work. He accidentally sees his daughter’s serial number appearing on the “watch list”. Her gene index is only 20% now.

Although people at that time don’t have much concept of family, the scientist doesn’t want her to be killed. According to his recent research, if he has other sets of good genes, he can inject them into his daughter to improve her index. He decides to steal them. Armed soldiers guard those genes, and he must get them without being caught by the guards. Every time the scientist puts one more good gene into his daughter’s body, the index on the gene testing machine increases a little. His goal is to recreate a 100% human.

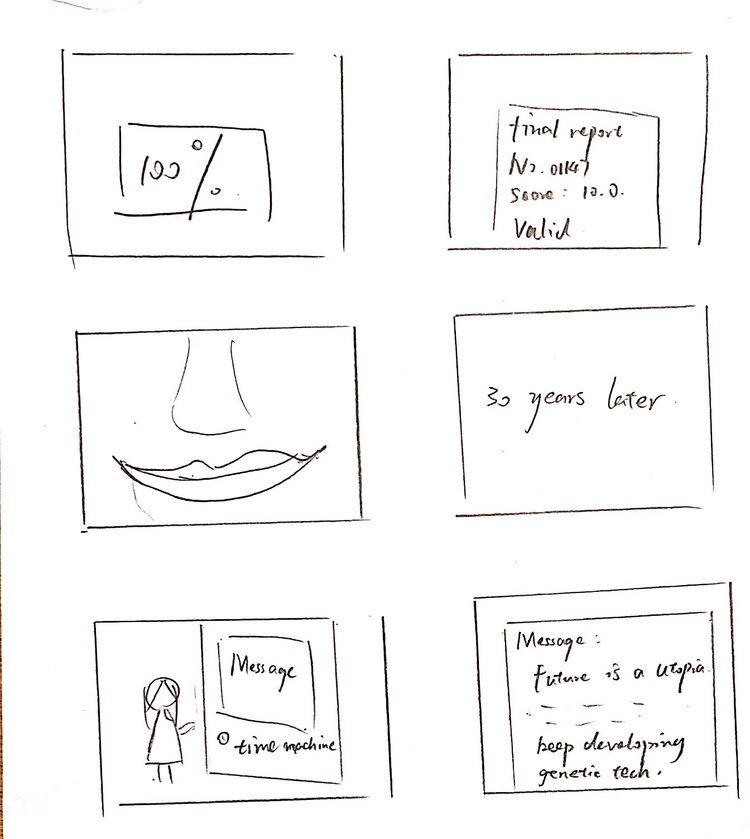

Finally, he succeeds. The girl passes the examination. After that, all the children are educated uniformly, as parts of the state apparatus. They won’t see their parents often. In some sense, they are raised as “functional replicants”. Of course, the scientist never tells his daughter the story. Since she has more good genes than the others, she grows up far superior to her peers. She is also more arrogant than the others. In the end, she becomes the leader of the gene supremacists.

The message is sent by her. In her description, the future is a utopia: all humans are perfect, and there are no diseases, crimes, or racial discrimination. She wants us to accelerate gene research and carry out the principles of eugenics, because if we do so in the present, their elimination work will be much easier.

It is an ironic ending but I think we can make our own judgment.

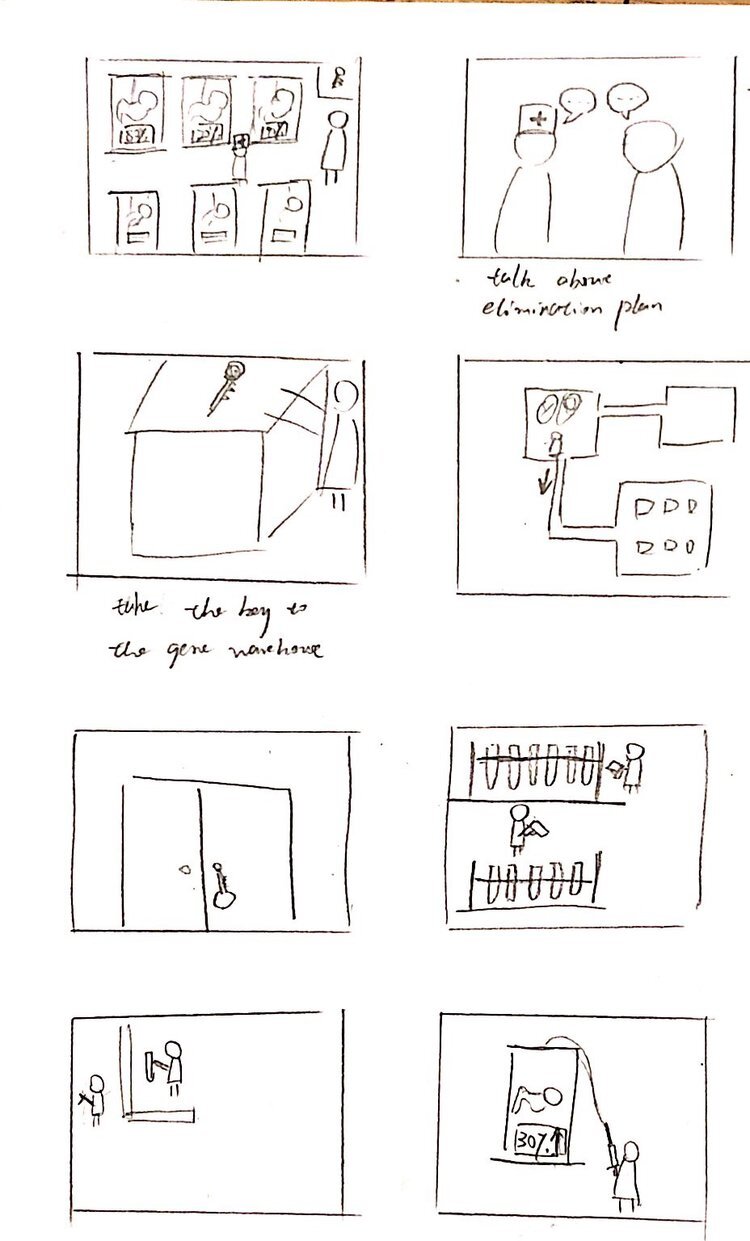

Storyboard

Physical Prototype and Timeline

Timeline

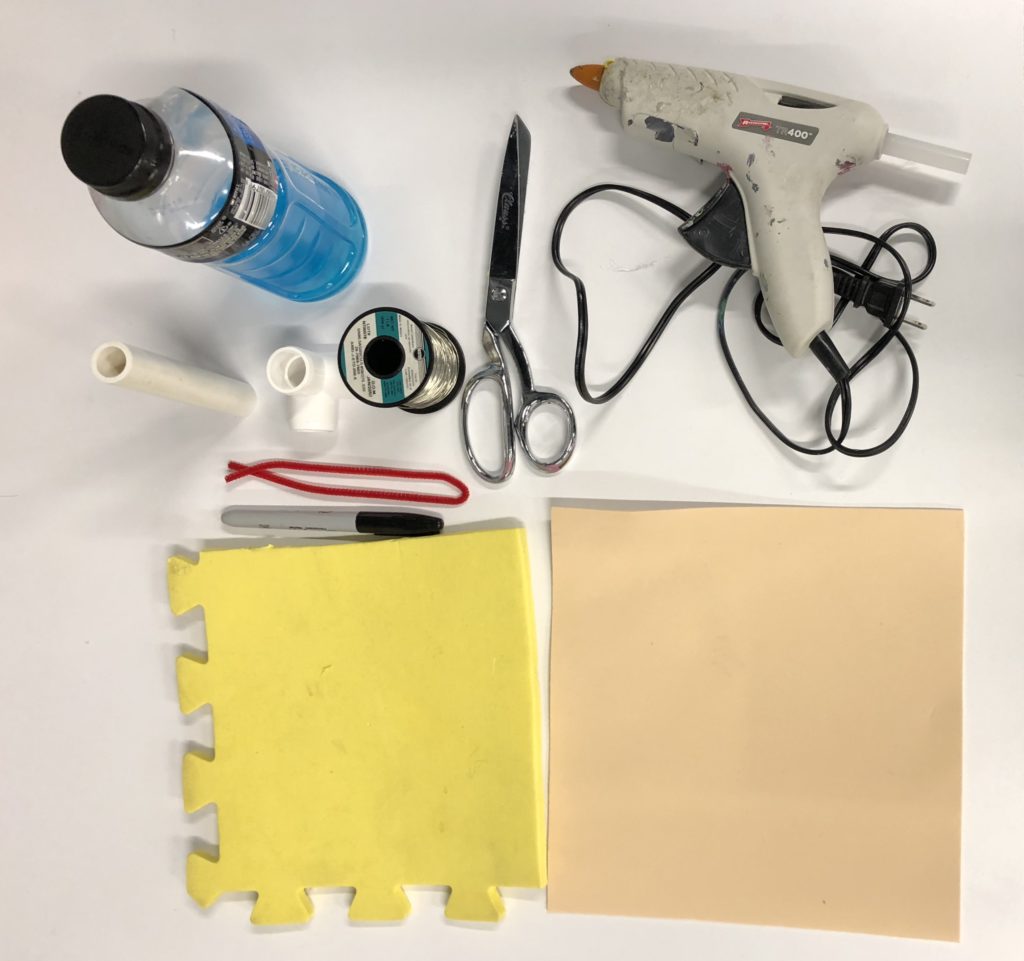

Tools

Physical Prototype

High-fi Prototype

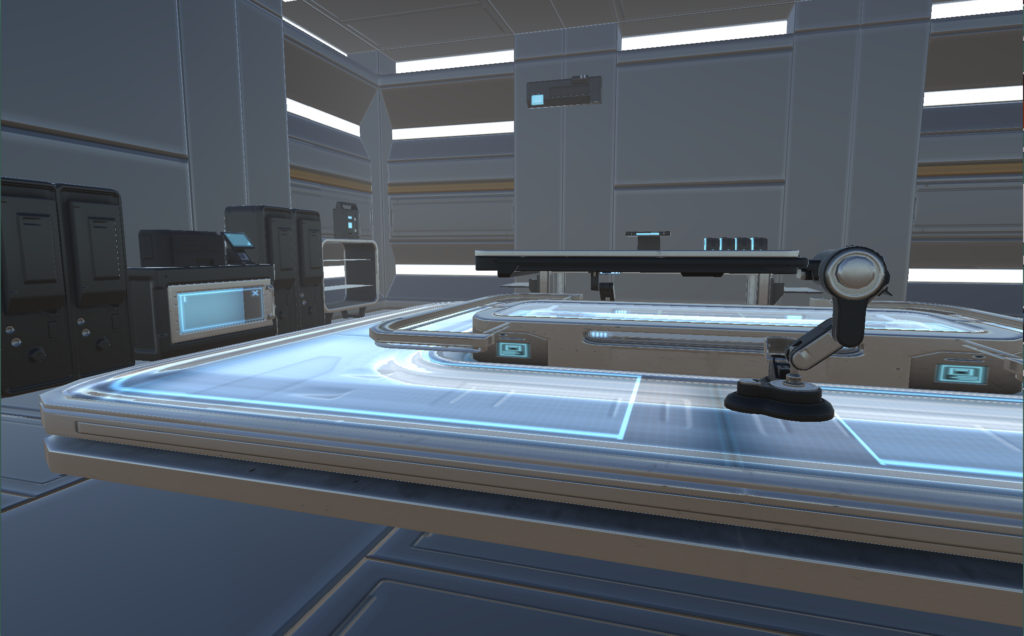

Based on my story, I built a 3D stealth game in Unity. The main character is the scientist, whose mission is to steal genes from the warehouse without being killed by robot guards and then inject them into his daughter’s body.

In the first version, I put some basic props into the scene and created some simple functions. The player can walk, run, jump, and use the mouse to change perspective. The winning condition is to get the genes from the warehouse, go back to the nursery room, and interact with a certain tube.

If the player is spotted by the guard, the game ends. I put one guard in the gene warehouse as the enemy. The biggest problem was that the AI guard was rather “stupid”: it would sometimes walk through models.

Also, because I hadn’t put any background information into the game, players had a hard time understanding the goal, and it was not clear which object they needed to interact with.

Then I iterated on the game, using different scripts to control the guards. If they see the player, they shoot and the alarm system turns on. The player has health, and the shooting damage depends on the distance between the player and the guard. I also put one more guard in the scene to increase the difficulty, and added more props to convey the background information.
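The guard behavior in this iteration comes down to two checks: can the guard see the player, and how much does a shot hurt at that distance. The Python sketch below illustrates both and is not the Unity script from the game: the function names, the view range, the field of view, and the linear falloff are assumptions, including the assumption that closer shots deal more damage.

```python
import math


def guard_sees_player(guard_pos, guard_facing_deg, player_pos, view_range=10.0, fov_deg=90.0):
    """True when the player is within the guard's range and field of view (2D, top-down).

    view_range and fov_deg are assumed values, and walls are ignored.
    """
    dx = player_pos[0] - guard_pos[0]
    dy = player_pos[1] - guard_pos[1]
    distance = math.hypot(dx, dy)
    if distance > view_range:
        return False
    if distance == 0.0:
        return True
    angle = math.degrees(math.atan2(dy, dx))
    # Wrap the difference into [-180, 180) before comparing with half the FOV.
    diff = (angle - guard_facing_deg + 180.0) % 360.0 - 180.0
    return abs(diff) <= fov_deg / 2.0


def shot_damage(distance, max_damage=30.0, view_range=10.0):
    """Damage for one shot, falling off linearly to zero at view_range.

    The direction of the relationship (closer means more damage) is an assumption.
    """
    falloff = max(0.0, 1.0 - distance / view_range)
    return max_damage * falloff


health = 100.0
if guard_sees_player((0.0, 0.0), 0.0, (5.0, 0.0)):
    health -= shot_damage(5.0)
```

Tying damage to distance rewards keeping away from guards even after being spotted, which suits a stealth game better than a fixed hit value.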

I changed the color of the objects the player needs to interact with. The player can also interact with some objects to see details. These serve both as the narrative and as instructions for what to do. I also added background music to create an intense atmosphere.

Here is a playthrough of the game (successful ending):

The successful ending of the game is ironic: the scientist saves his daughter, but she then becomes the leader of the eugenics movement. The message is sent by her, Lady S. She describes the future as a utopian world and encourages people in the present to keep developing DNA technology. I created a news webpage to show her message.

Although she describes a utopian world, my real intent is to criticize this kind of eugenics and show a dystopia.

Social Class

2D Narrative Game

This piece reflects on the problems of social class. Try it on GitHub

Technology

P5.js / Photoshop / Handdrawing

Time

2017

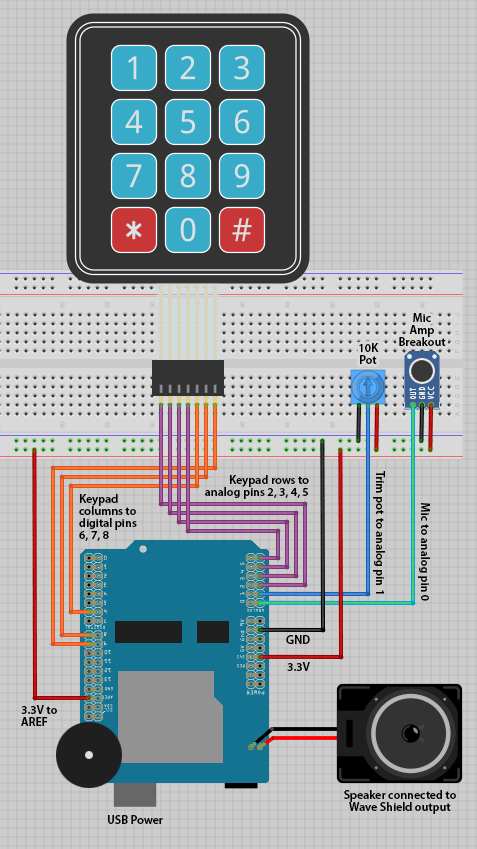

Voice Chamber

Wearable | Physical Computing

A way to help you understand/get out of social isolation and loneliness

Social isolation and feelings of loneliness are common among human beings and can cause health issues. The reasons behind them vary, and there is no general solution that fits everyone. Social isolation and loneliness are hard to measure and have no clear standards. People who have frequent social activities may still face these issues. Sometimes people are not even aware of them, or are afraid to face them. Through this project, I’d like to tell people that this situation is common rather than something to hide or be ashamed of. Hopefully, it can help reduce the pain and the health consequences that social isolation may cause.

Technology

Arduino / Adafruit / Soldering / Laser Cutting / Max MSP

Time

2018

RESEARCH

Since social isolation and loneliness are a very human-centered topic and everyone’s situation varies, I decided to design an initial survey first to get a basic sense of people’s attitudes towards social isolation and loneliness and whether they have ever felt them, as well as to collect their demographic and contact information.

The link to the survey is: https://goo.gl/forms/c5qM5Zl2ClFrXMK33

INSIGHTS

The result was a little surprising: most people said that they had been socially isolated or had felt lonely before, and that they think social isolation or loneliness is a neutral thing, which corresponds to the opinion I want to express through my project: you don’t have to feel bad about social isolation and loneliness. Meanwhile, more people think social isolation is a kind of feeling rather than an external state, which does not match the academic definition of social isolation. When I designed the survey, I intentionally didn’t define social isolation anywhere because, personally, I don’t totally agree with the ‘official’ definition. I’m not sure whether I was right, but I just wanted to know people’s opinions about it.

CONSTRAINTS

So far, I have received 24 responses to my survey, and the respondents are mostly young adults. The sample is too small and the demographics are not varied enough. Also, this time I didn’t ask any deep questions about the reasons or ask respondents to share stories, because I think it would be more efficient to talk to people in person to get that kind of information.

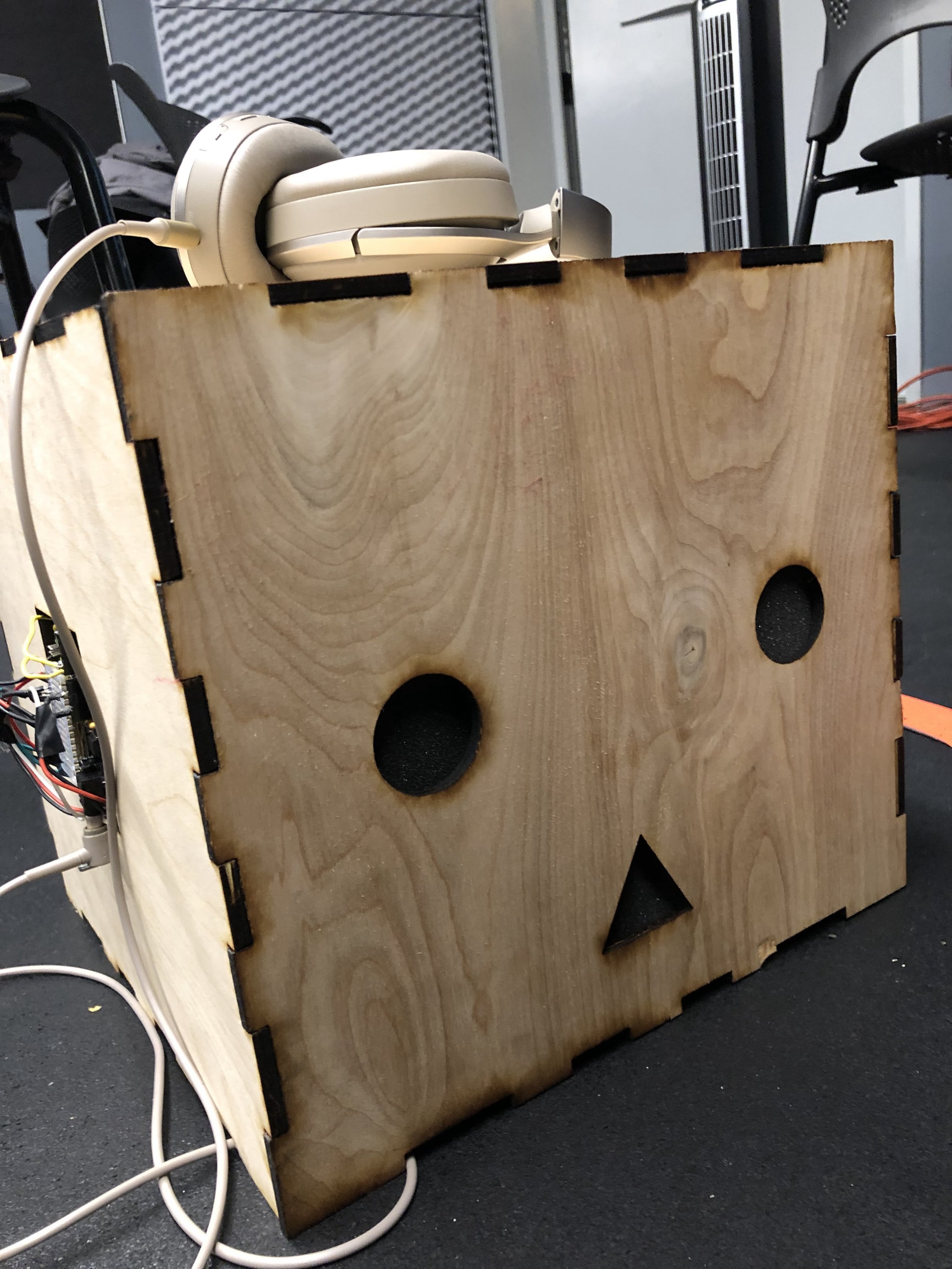

1st prototype: Echo Chamber

The ‘echo chamber gear’ works as a simulation of social isolation/loneliness in order to serve the project’s informative purpose. The gear is a closed, dark environment, and the person inside can only hear the echo of their own voice instead of outside sounds. The unpleasant experience stops only when they take off the gear or when somebody else touches the touch sensor on the outside, because I think social isolation/loneliness can only be overcome on one's own initiative or with help from the community.
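The echo itself can be thought of as a feedback delay: each sample gets a quieter copy of what was heard a moment earlier added back in. The Python sketch below only illustrates that idea under assumed names and values (`add_echo`, `delay`, `decay`); it is not the audio patch used in the prototype.

```python
def add_echo(samples, delay, decay=0.5):
    """Hypothetical feedback echo: add a decayed copy of the output
    from `delay` samples earlier to every later sample."""
    out = list(samples)
    for i in range(delay, len(out)):
        # out[i - delay] already contains earlier echoes, so repeats fade out
        out[i] += decay * out[i - delay]
    return out

# A single click followed by silence produces a fading train of repeats
echoed = add_echo([1.0, 0.0, 0.0, 0.0, 0.0], delay=2)
```

A longer `delay` and a `decay` closer to 1 make the space feel larger and emptier, which is the effect the gear is after.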

For the first prototype, I decided to test whether the concept could really make people feel isolated and lonely, without using any circuit. So I used a paper box as the gear and Max MSP to create the echo sound. Below is a short demo video:

2nd Prototype: Voice Changer

Another idea that came to me: since a lot of people become socially isolated because of their personal situations, including financial status, appearance, etc., what if I created a wearable that could obscure their identities?

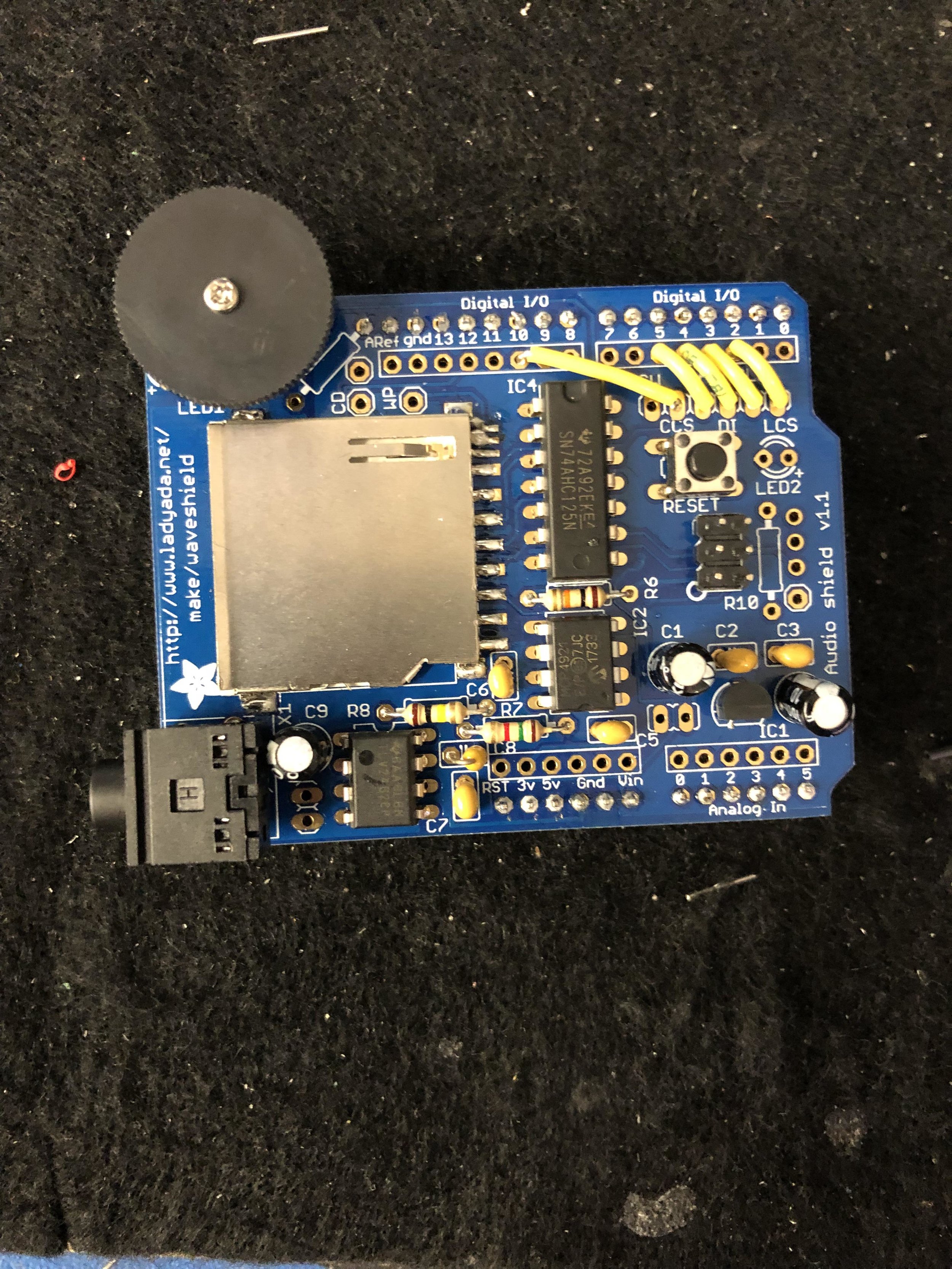

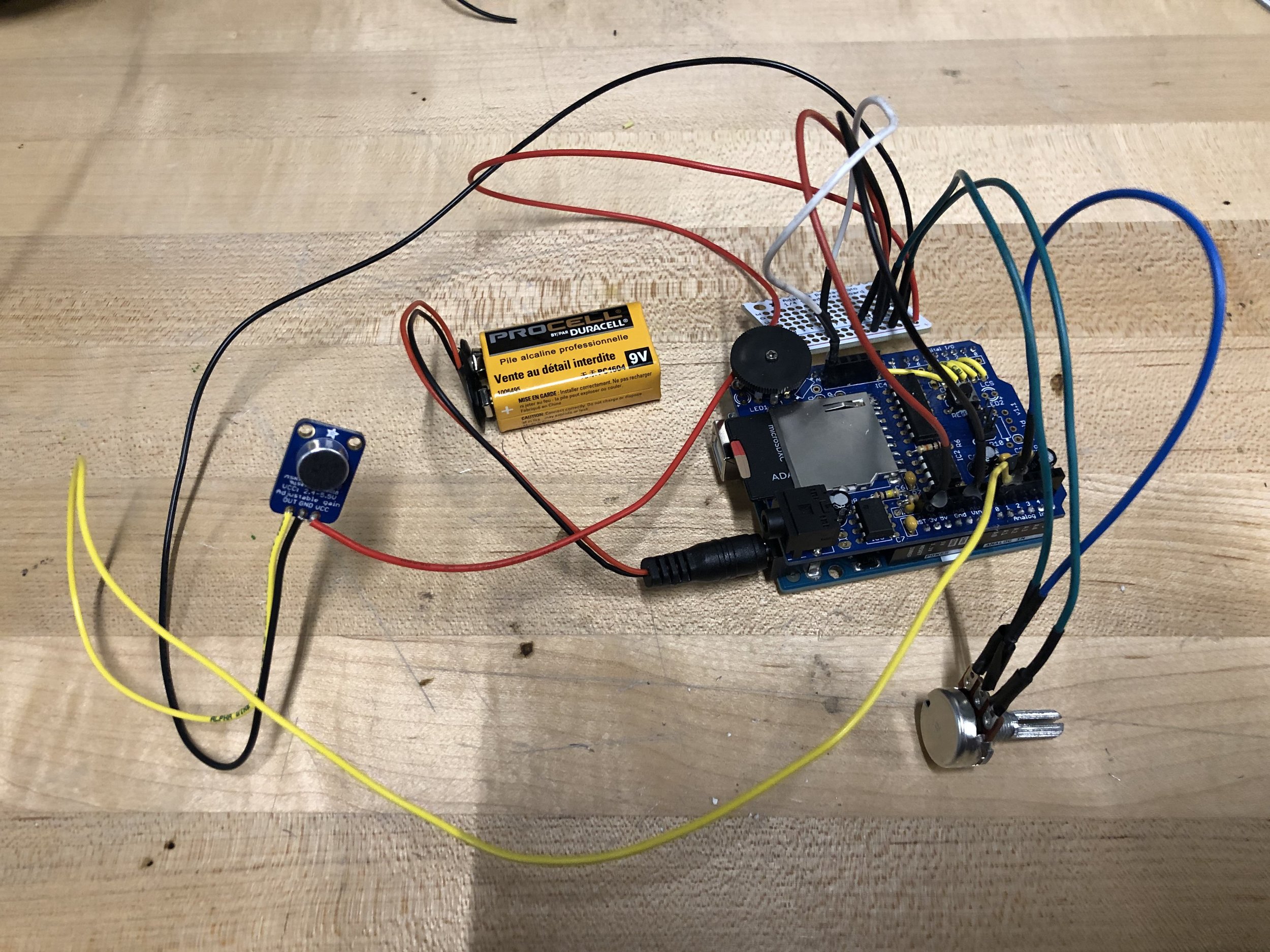

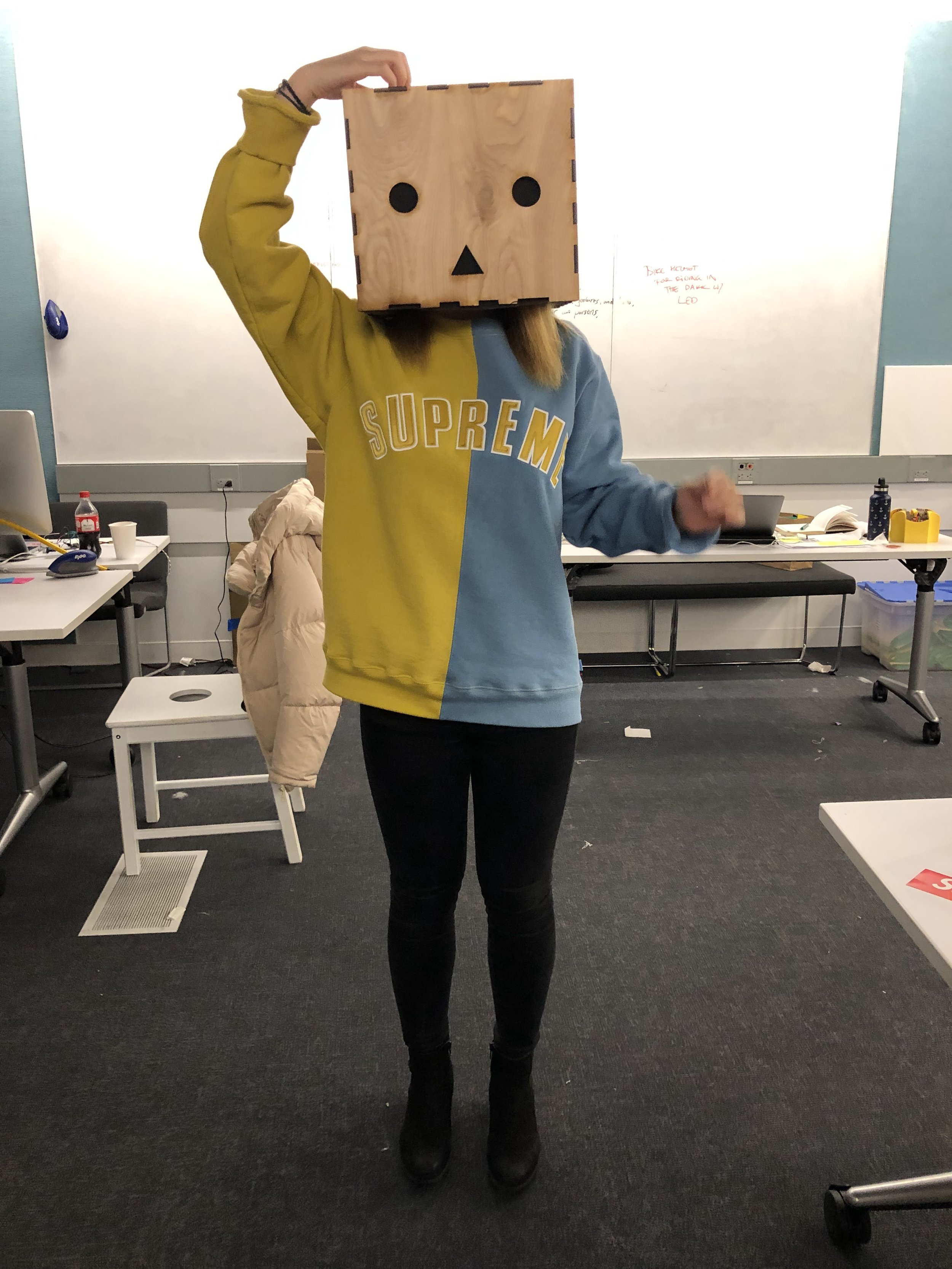

Basically, I used the same core components as in my first prototype. I used the Adafruit Wave Shield with an Arduino to build a voice changer. I also laser-cut a wooden box and put all the electronic parts inside it. I designed the box to have a cute face because I assume that will encourage people to try it and make other people willing to interact with the person inside.

I invited multiple people to try it on, and the process was really fun. People were willing to put it on, talk, and act as an anonymous person. But for now, I’m still not sure whether it will give isolated people the courage to go out and connect with others. I need more people to test it in the future.

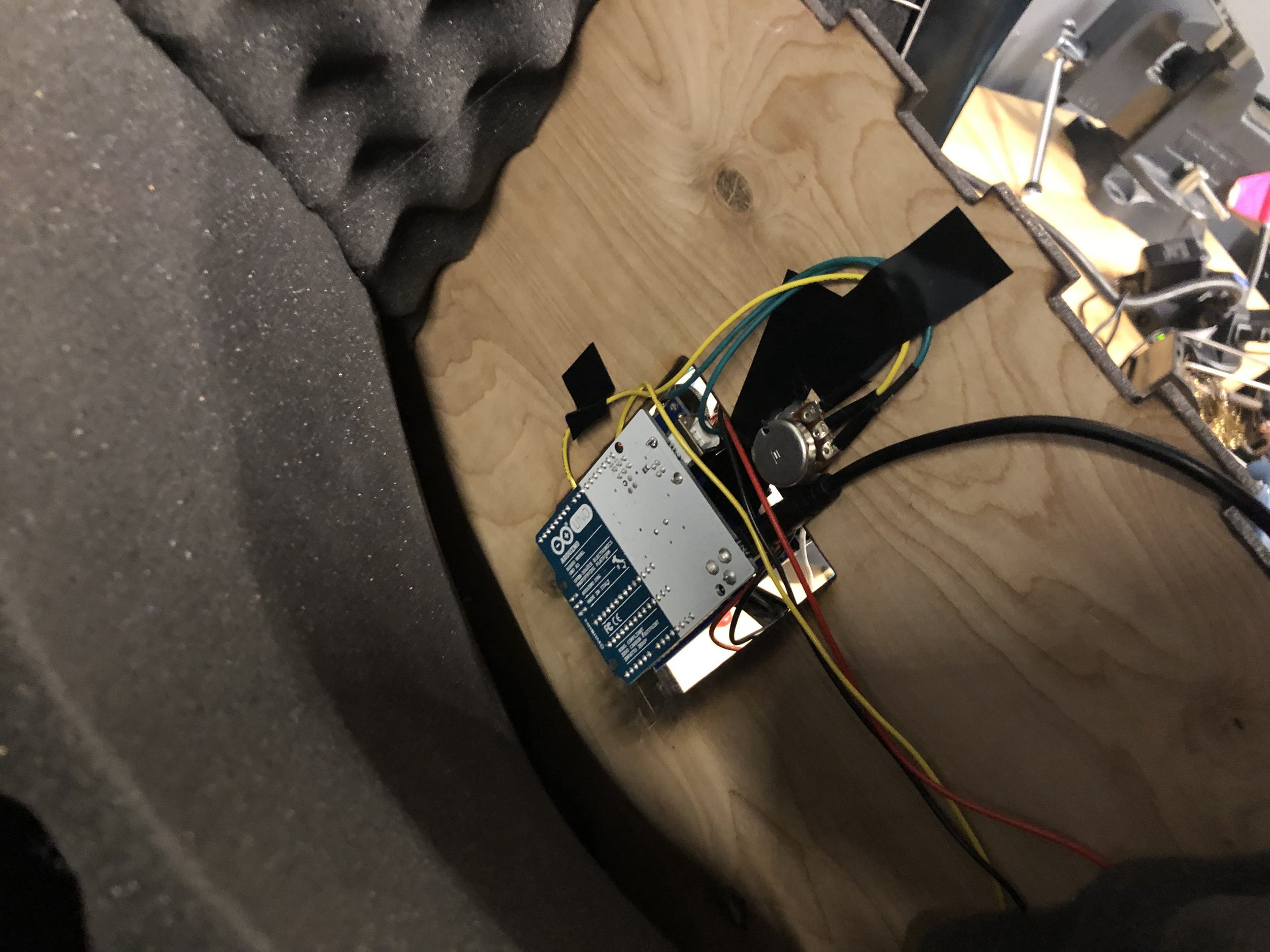

SOLDERING PROCESS

After I got the Wave Shield, I started soldering all the components, which was a lot of work. The before and after pictures are below.

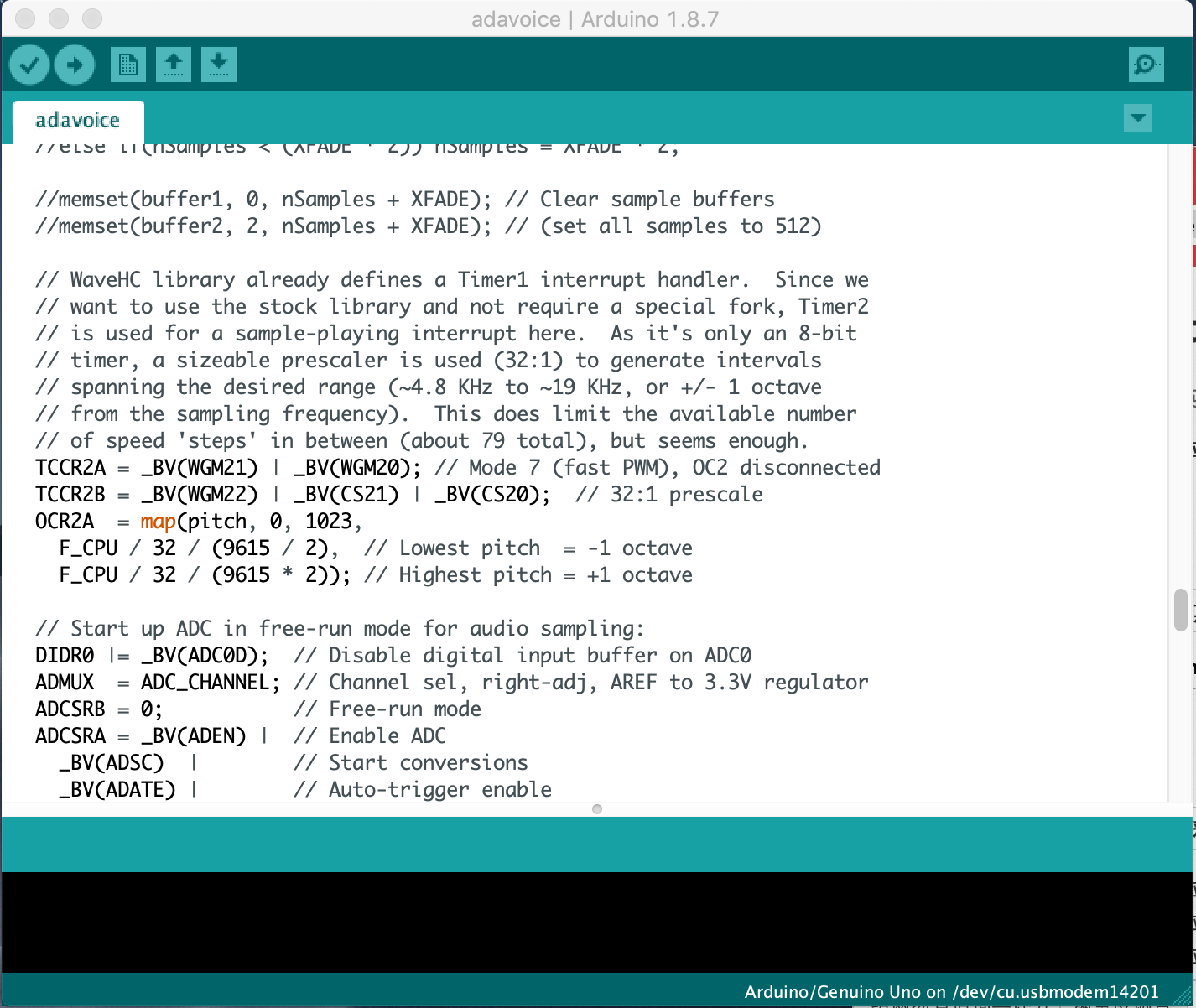

PLAY SOUND FILES

Credit to lady ada on Adafruit for providing this tutorial: https://learn.adafruit.com/adafruit-wave-shield-audio-shield-for-arduino.

The first test I did was running the most basic function of the Wave Shield, which is playing sound from the SD card. One thing worth mentioning is that the Wave Shield can only play 16-bit mono PCM WAV files at a 22 kHz sample rate, so any sound files with different settings need to be converted. I used Audacity to do that. As a result, all the sounds played perfectly in a loop, and I could use the potentiometer to adjust the volume in real time.
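Before copying files to the SD card, it can help to check which ones still need converting. The sketch below is one possible way to do that on a computer with Python's standard `wave` module; the function name is hypothetical, and the exact rate of 22050 Hz is an assumption about what "22 kHz" means here.

```python
import wave

def wave_shield_issues(path, expected_rate=22050):
    """Return a list of reasons a WAV file does not match the format
    described above (16-bit, mono, assumed 22050 Hz). Empty list = OK."""
    issues = []
    with wave.open(path, "rb") as wav:  # raises wave.Error for non-PCM files
        if wav.getnchannels() != 1:
            issues.append(f"{wav.getnchannels()} channels, expected mono")
        if wav.getsampwidth() != 2:  # sample width in bytes
            issues.append(f"{wav.getsampwidth() * 8}-bit samples, expected 16-bit")
        if wav.getframerate() != expected_rate:
            issues.append(f"{wav.getframerate()} Hz, expected {expected_rate} Hz")
    return issues
```

Any file that returns a non-empty list is a candidate for conversion in an audio editor.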

See the video below:

VOICE CHANGER

Credit to Phillip Burgess on Adafruit for providing this tutorial: https://learn.adafruit.com/wave-shield-voice-changer/overview

I used a breadboard to hook everything up. Basically, the Arduino code reads the pitch setting as a value from 0 to 1023 and maps it to the pitch of the voice output.
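To make the 0 to 1023 range concrete: that is the span of a 10-bit analog reading on an Arduino. The Python sketch below shows one way such a reading could be mapped linearly to a playback-rate factor. The function name and the 0.5x to 2.0x range are assumptions for illustration and are not taken from the tutorial code.

```python
def reading_to_playback_rate(reading, low=0.5, high=2.0):
    """Map a 10-bit analog reading (0..1023) linearly to a hypothetical
    playback-rate factor; below 1.0 lowers the voice, above 1.0 raises it."""
    reading = max(0, min(1023, reading))  # clamp out-of-range readings
    return low + (high - low) * reading / 1023

# Knob fully down, and fully up
lowest = reading_to_playback_rate(0)
highest = reading_to_playback_rate(1023)
```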

It worked as shown in the video:

But the constraint here is that the potentiometer that controls the pitch can’t work in real time, because the Arduino and Wave Shield can’t read the sound files and change the pitch at the same time. So after I change the pitch by turning the potentiometer, I have to press the reset button.

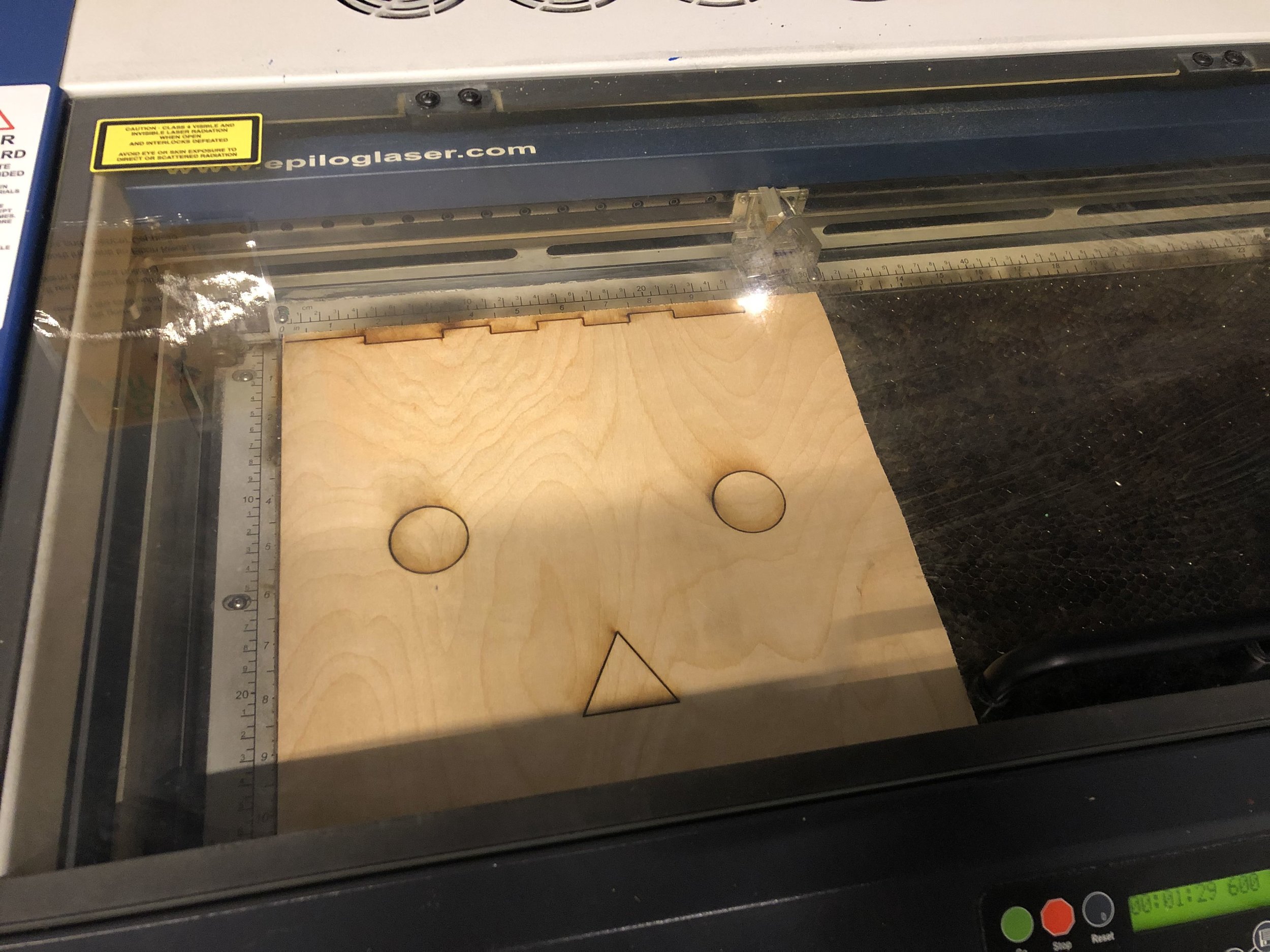

LASER CUT

Here comes the wearable part. I designed the box as a cute robot avatar, which I think could make other people willing to interact with you. I put acoustic foam inside to isolate sound as well as to provide a soft surface to wear.

To make it portable, I soldered the wires onto a PCB and powered it with a 9V battery. Luckily, the battery can provide enough power for both the Arduino and the Wave Shield.

Then I cut a hole in the side of the box to mount the circuit on the edge. It looks kinda nice from both the outside and the inside. I left the Wave Shield exposed because I have to press the small reset button. I think there should be a better way to cover the shield, which I could do later.

User Test

I had multiple people try on my voice box, and we had fun with it.

NEXT STEP

My next step is to find more people for my survey and talk to them, especially people who have experienced social isolation or are experiencing it deeply. I’m trying to reach people through Facebook groups, meetups, and organizations. I think that’s one of the most difficult parts of the project: I can’t easily reach somebody who’s very socially isolated. I’m also trying to interview more experts in this field, including sociologists, psychologists, and therapists. I think talking to specialists would help me get better insights and might help me find people to talk to.

Meanwhile, I will keep developing my echo chamber prototype, do user testing, and iterate based on the feedback. I would also love to run some cultural probe experiments when I have time. I think it would be a really good way to understand people better.

Power Running Suit

Wearable

An eco-friendly way to harvest the kinetic energy the human body generates by walking or running and transform it into power for lighting, using the mechanism of a shake flashlight.

Technology

Circuit / Soldering / Sewing

Time

2018

Mechanism

If we rotate a wire loop in a magnetic field, the field will induce an electric current in the wire.
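This is Faraday's law of induction. Written out for a coil, with $N$ for the number of turns and $\Phi_B$ for the magnetic flux through one turn (symbols introduced here, not in the original notes), the induced voltage is:

$$\mathcal{E} = -N \frac{d\Phi_B}{dt}$$

What matters is that the flux changes over time, so sliding a magnet back and forth through a fixed coil induces a voltage just as rotating a loop in a field does. More turns, a stronger magnet, and faster motion all increase the voltage.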

While we walk or run, our lower body will keep moving back and forth, which can be used to keep the magnet moving in the coil. I tried to figure out which part of our lower body moves the most.

Ideally, the coil should be tied to the shank or foot. The size should be designed small enough to fit on the body. There will be LEDs on the side of the leg.

To test which part works better and to learn the inner structure of a shake flashlight, I bought one on eBay and tried tying it to different parts of the body. It turns out that tying it to one foot is the most efficient solution.

1st Prototype

Materials

Coil (Wire & Pipe)

Magnet

LED lights

Diode bridge

Capacitor

Resistor

The first thing I did was make the coil. I tried to use materials I already had, so I made the pipe by rolling up a plastic sheet and used some electrical wire for the coil. I put both the sphere and disc magnets inside. However, when I shook the coil, the connected LED didn’t light up.

The problem might have been that I used the wrong type of wire, that I didn’t have enough turns of wire, or that the magnet was not strong enough. To troubleshoot, I bought new supplies online and built the second prototype.

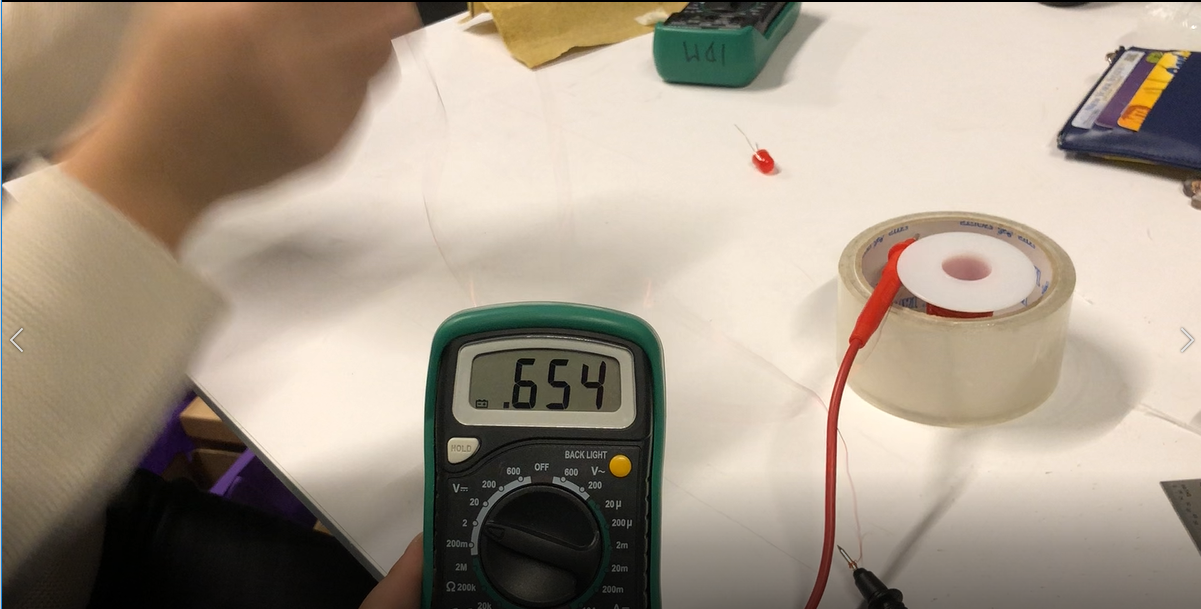

2nd Prototype

For the second prototype, I used enameled copper magnet wire (Remington Industries 30SNSP.125 Magnet Wire, Enameled Copper Wire Wound, 30 AWG, 2 oz, 402' Length, 0.0108" Diameter, Red), which is very thin, and I wrapped it hundreds of times around the center of the coil. I also used some super strong magnets (10Pc Super Strong N42 Neodymium Magnet 1.26" x 1/8" W/3m Adhesive NdFeB Discs) to get a strong magnetic field. I used a multimeter to test whether any power was generated.

It turned out I got some power!

Then I built the circuit on a breadboard. The diode bridge takes the alternating electrical output of the coil and gets it flowing in one direction. I used four single diodes to build the bridge. The capacitor stores some of the electricity.

The second prototype worked but was not stable enough to become a wearable and be tested on the human body.
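What the diode bridge does to the coil's output can be sketched numerically. In a four-diode bridge, two diodes conduct on each half-cycle, so the output is roughly the absolute value of the input minus two diode drops. The Python function below is an idealized illustration; its name and the 0.7 V drop per diode (a typical figure for silicon diodes) are assumptions, not measurements from this circuit.

```python
def bridge_rectify(voltages, diode_drop=0.7):
    """Idealized full-wave bridge: flip negative half-cycles and subtract
    the drop of the two diodes that conduct at any one time."""
    return [max(0.0, abs(v) - 2 * diode_drop) for v in voltages]

# Alternating coil output becomes one-directional; small swings are lost
rectified = bridge_rectify([3.0, -3.0, 1.0, -1.0])
```

The sketch also shows why a weak coil output may not light the LEDs at all: anything below two diode drops never makes it through the bridge.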

Final Prototype

To make it more stable and smaller, I decided to use a double-tube design for the coil, which means adding an extra acrylic tube outside the coil. Meanwhile, I soldered the whole circuit onto a smaller PCB and sewed it onto a pair of sports pants.

The main problem I faced was how to tie the coil onto my foot. I haven’t found the best solution yet, so I just used some rope. It worked fine, but I’m still looking for a better solution.