Craft

Inclusive VR Painting Solution | Inclusive UX Design

TECHNOLOGY: UE4 / Oculus Rift / Sketch

TEAM MEMBERS: Shimin Gu / Cherisha Agarwal / Joanna Yen / Pratik Jain / Raksha Ravimohan / Srishti Kush

ROLE: Technical Director / UX Researcher

Designing for every person means embracing human diversity as it relates to the nuances in the way we interact with ourselves, each other, objects, and environments. Designing for inclusivity opens up our experiences and reflects how people adapt to the world around them. What does this look like in the evolving deskless workplace? By deskless workplace, we mean one where people aren't constrained to a single traditional or conventional office setting.

Goal: Design a product, service, or solution to solve for exclusion in a deskless workplace.

Since we are all designers in a program that involves many kinds of artists, we connected with the idea of designing for artists with limited hand mobility who want to create content in VR. Our professor, Dana Karwas, and Nick Katsivelos from Microsoft encouraged us to work in this area since not much has been done in it.

Initial Research

How might we build a multi-modal tool for people with limited mobility in their arms to create art in Virtual Reality?

Approaching this idea was not easy. We talked to many designers at IDM to understand their workflow, how they use software, and the problems that people with limited hand mobility would likely run into. We were fascinated by the idea of a multi-modal application that uses eye tracking and voice commands to let people draw in VR.

To dive deep into the technology and understand its use, we read a few papers listed here and got great insights from professors at NYU like Dana Karwas, Todd Bryant, Claire K Volpe, experts in UX research like Serap Yigit Elliot from Google, and Erica Wayne from Tobii. Every person we spoke to gave us more insights to tackle the challenge at hand from a user experience, VR technology, and accessibility perspective.

Interview

We had conversations with 115 individuals to validate our assumptions. We tested our prototype with potential users who face mobility issues. We also spoke to accessibility experts and disability centers to get a sense of how they would invest in our product to benefit their community.

PEOPLE WITH DISABILITIES

Paralysis

Cerebral Palsy

Multiple Sclerosis

Spinal Cord Injury

ORGANIZATIONS

Hospitals

Senior Homes

Disability Centers

Accessibility Centers

EXPERTS

VR Prototypers

UX Designers

Accessibility Experts

Therapists

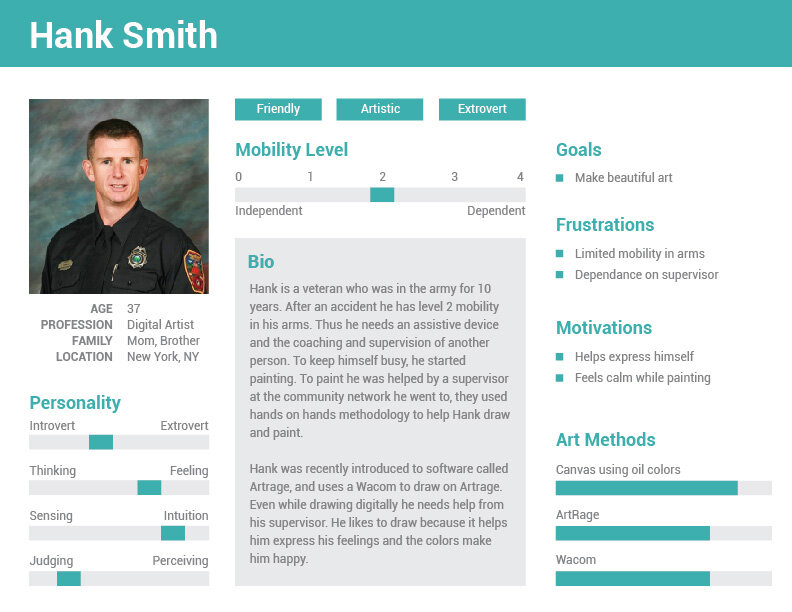

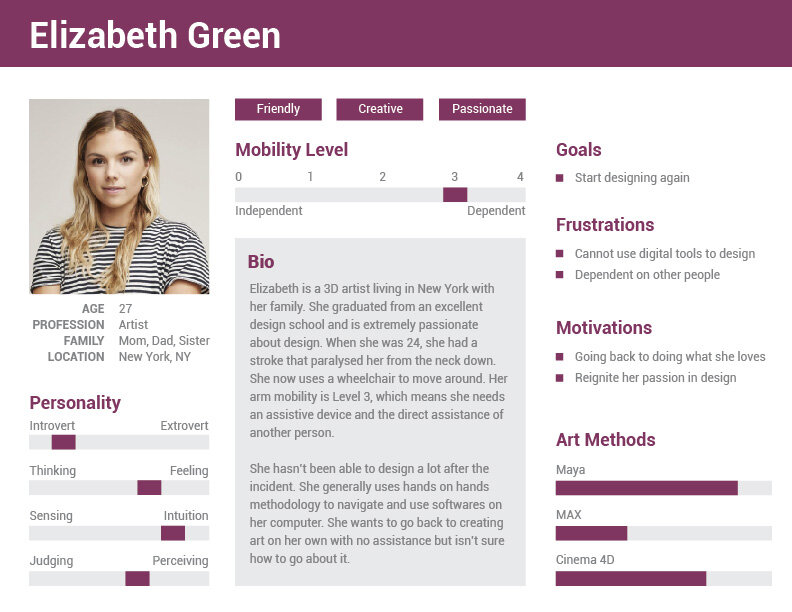

User Persona and Journey

Low-fi Prototype

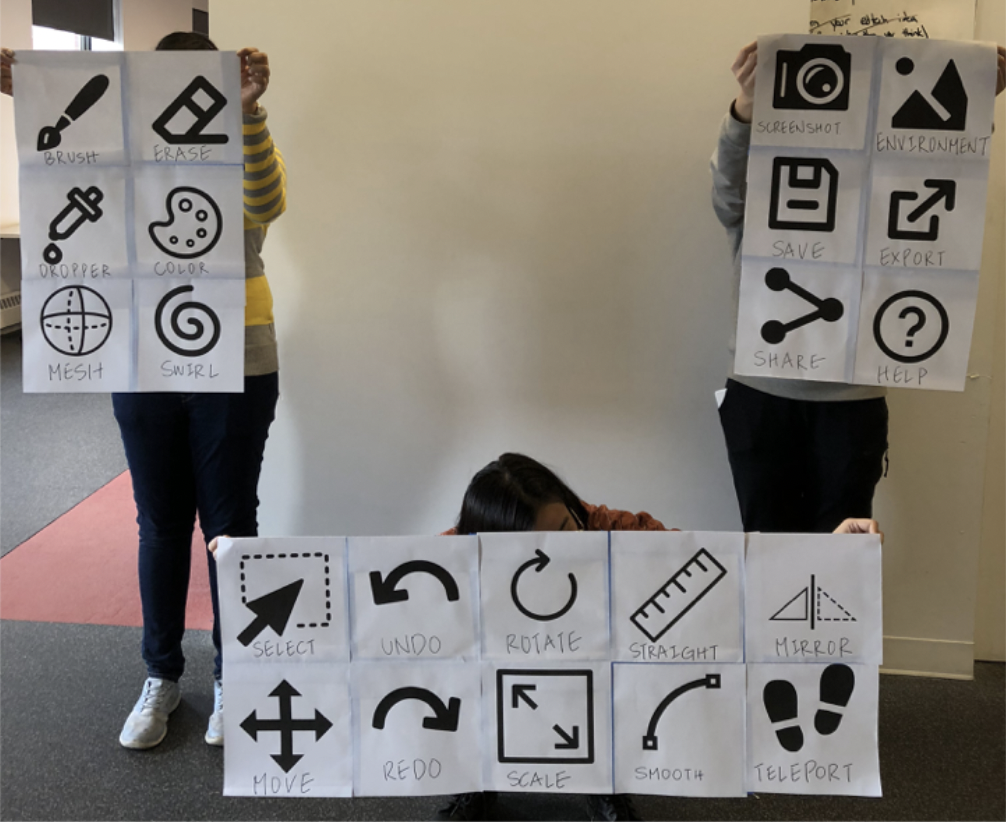

After extensive research, we decided to get our hands dirty and build our very first prototype. How do we make a prototype for an art tool in VR? We figured out that the best way to convey our interaction is through a role play video. We did some rapid prototyping using some paper, sharpies, and clips to bring our interface to life.

High-fi Prototype

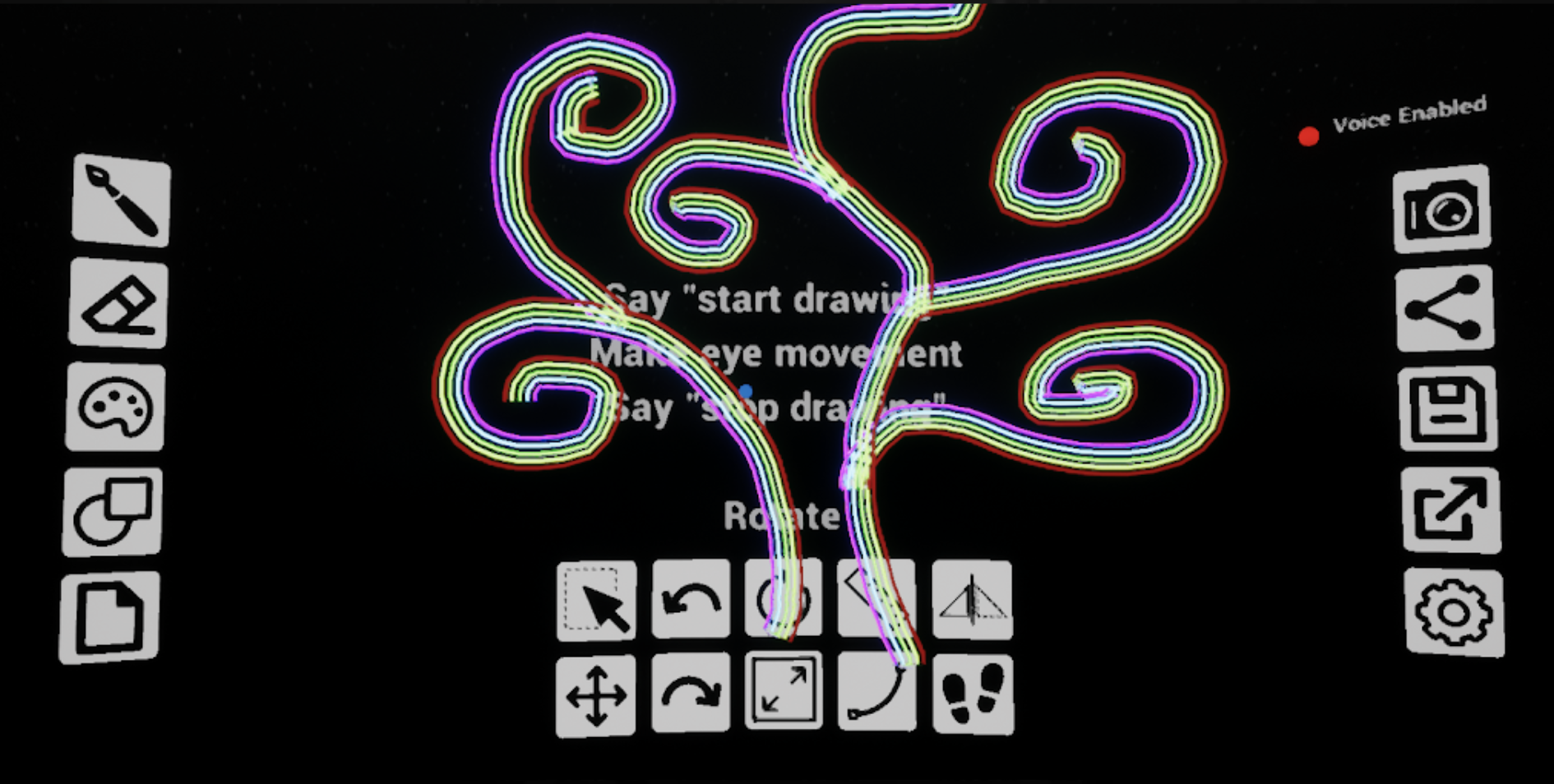

The final prototype was done using Unreal and had the functionality for drawing using head movements, changing the stroke, changing the environment, and teleporting.

Our prototype realized the most basic function of an eye-tracking painting tool in virtual reality by using head movements in place of gaze. The user could choose tools, paint, teleport, and change the environment, but the prototype was still constrained by some technical limitations. A few functions such as erase, undo, and redo could not yet be implemented in Unreal, and we hope to make them work with other software and hardware. We also hope to look into eye-tracking technology so that users can select tools and draw with gaze movements. In our current prototype, voice instructions are monitored manually rather than recognized by the software; we would like to add voice recognition as well to make the solution truly multi-modal.
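To make the idea of drawing with head movements concrete, here is a minimal sketch of one way it could work: cast a ray from the head along its forward direction, intersect it with a flat canvas, and collect the hit points into a stroke. This is not the Unreal implementation used in the prototype. The function names, the flat canvas at a fixed distance, the axis convention (x forward, y right, z up), and the units in meters are all assumptions made for illustration.

```python
import math

def head_forward(yaw_deg, pitch_deg):
    """Unit forward vector for a head pose (assumed axes: x forward, y right, z up)."""
    yaw, pitch = math.radians(yaw_deg), math.radians(pitch_deg)
    return (math.cos(pitch) * math.cos(yaw),
            math.cos(pitch) * math.sin(yaw),
            math.sin(pitch))

def ray_hit_canvas(origin, direction, canvas_x):
    """Intersect the head ray with a vertical canvas plane at x = canvas_x.

    Returns the (y, z) point on the canvas, or None when the user is
    looking away from (or parallel to) the canvas.
    """
    dx = direction[0]
    if dx <= 1e-6:
        return None
    t = (canvas_x - origin[0]) / dx
    if t < 0:
        return None
    return (origin[1] + t * direction[1], origin[2] + t * direction[2])

def build_stroke(poses, head_pos=(0.0, 0.0, 1.6), canvas_x=2.0, min_step=0.01):
    """Turn a sequence of (yaw, pitch) head poses into a list of canvas points.

    Points closer than min_step to the previous one are skipped so that
    small head jitter does not flood the stroke with duplicates.
    """
    stroke = []
    for yaw, pitch in poses:
        hit = ray_hit_canvas(head_pos, head_forward(yaw, pitch), canvas_x)
        if hit is None:
            continue
        if not stroke or math.dist(stroke[-1], hit) >= min_step:
            stroke.append(hit)
    return stroke

if __name__ == "__main__":
    # Hypothetical head sweep: turning slowly to the right while looking level.
    sweep = [(yaw, 0.0) for yaw in range(0, 31, 5)]
    for point in build_stroke(sweep):
        print(point)
```

A real eye tracker could replace `head_forward` with a gaze direction while leaving the rest unchanged, which is one reason head movement works as a stand-in for gaze in an early prototype.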

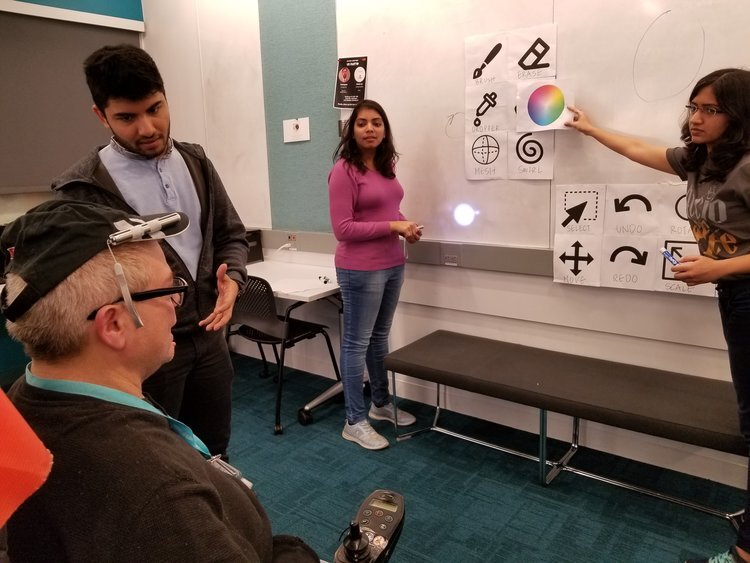

User Test

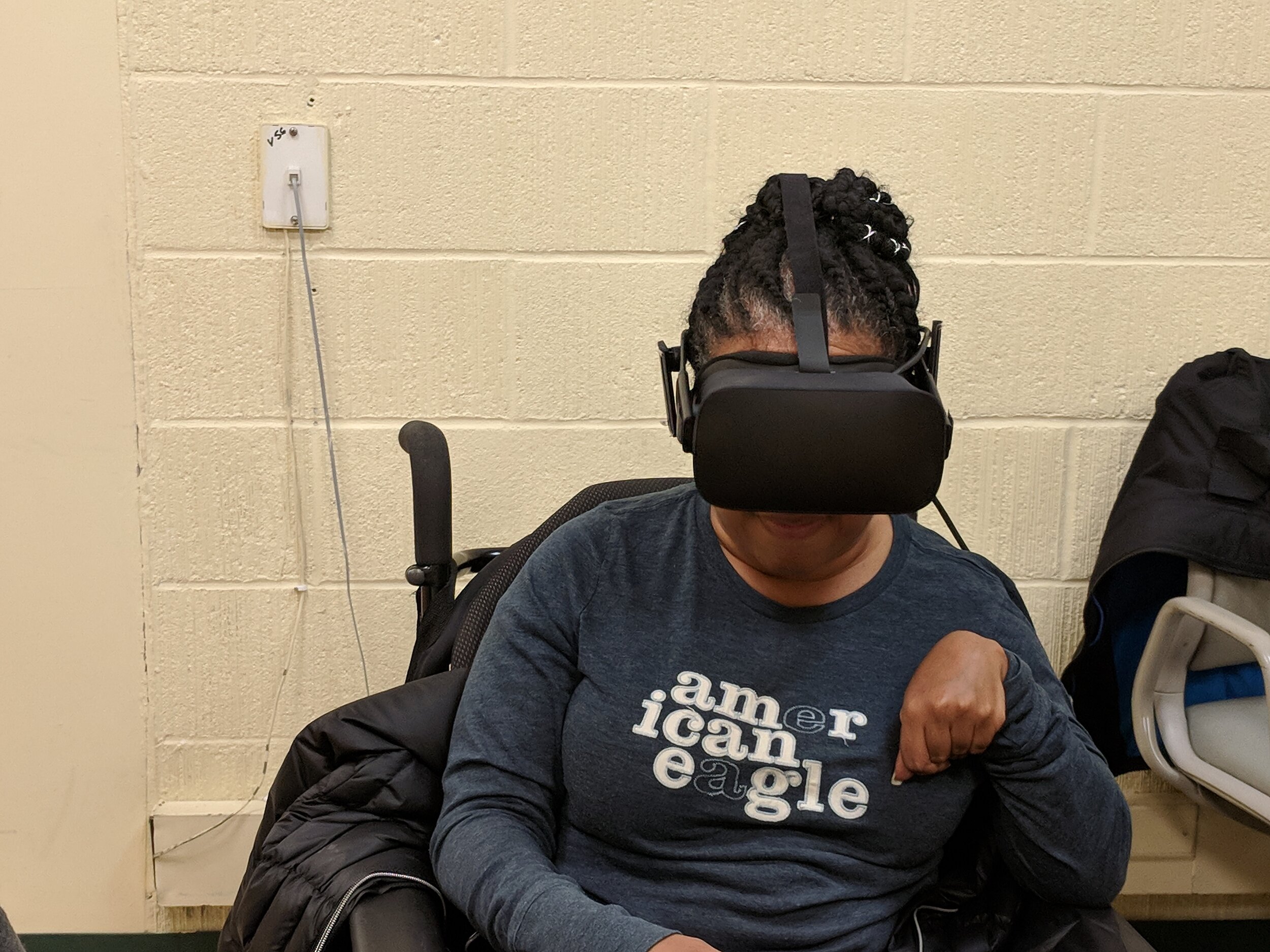

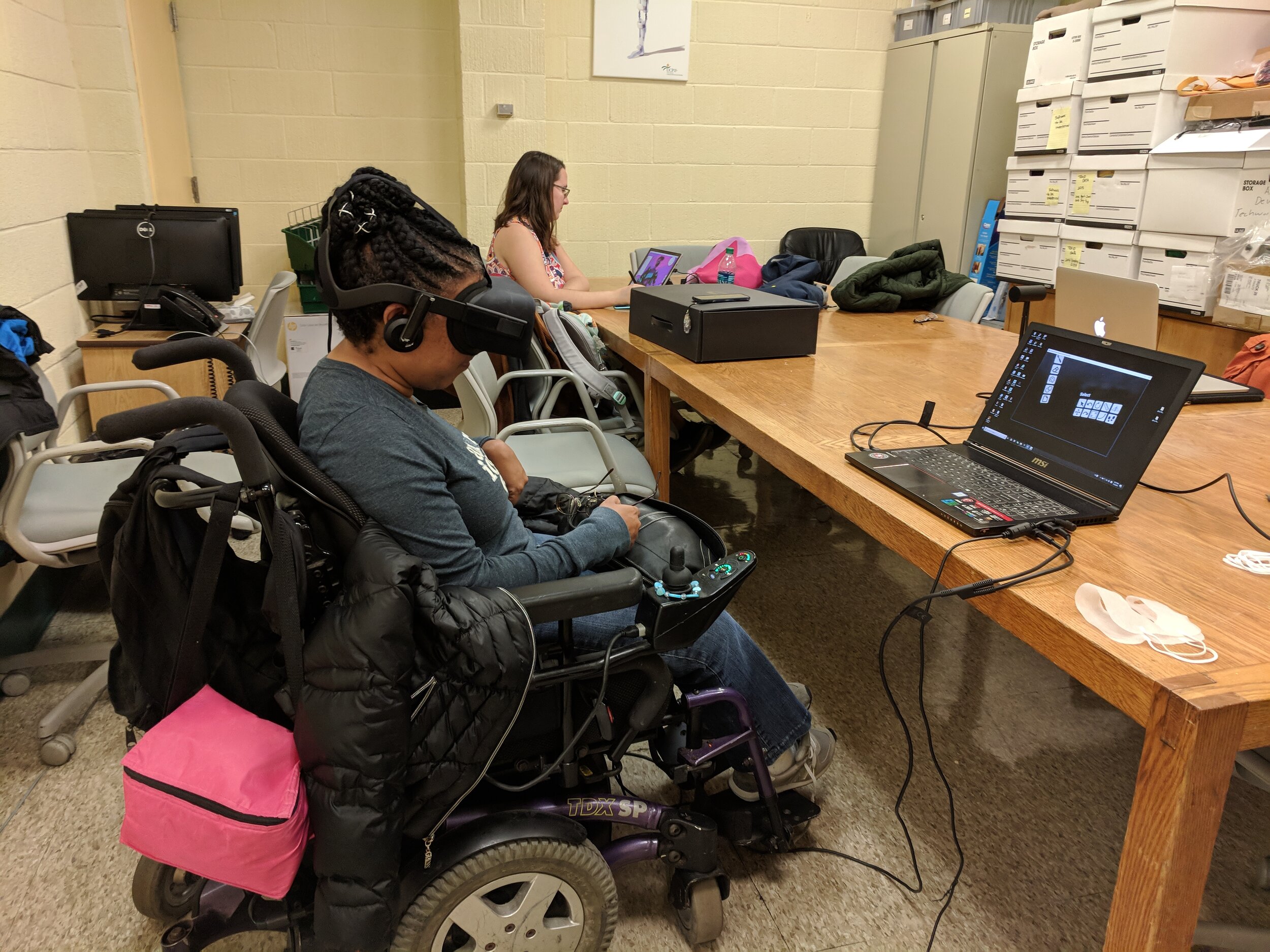

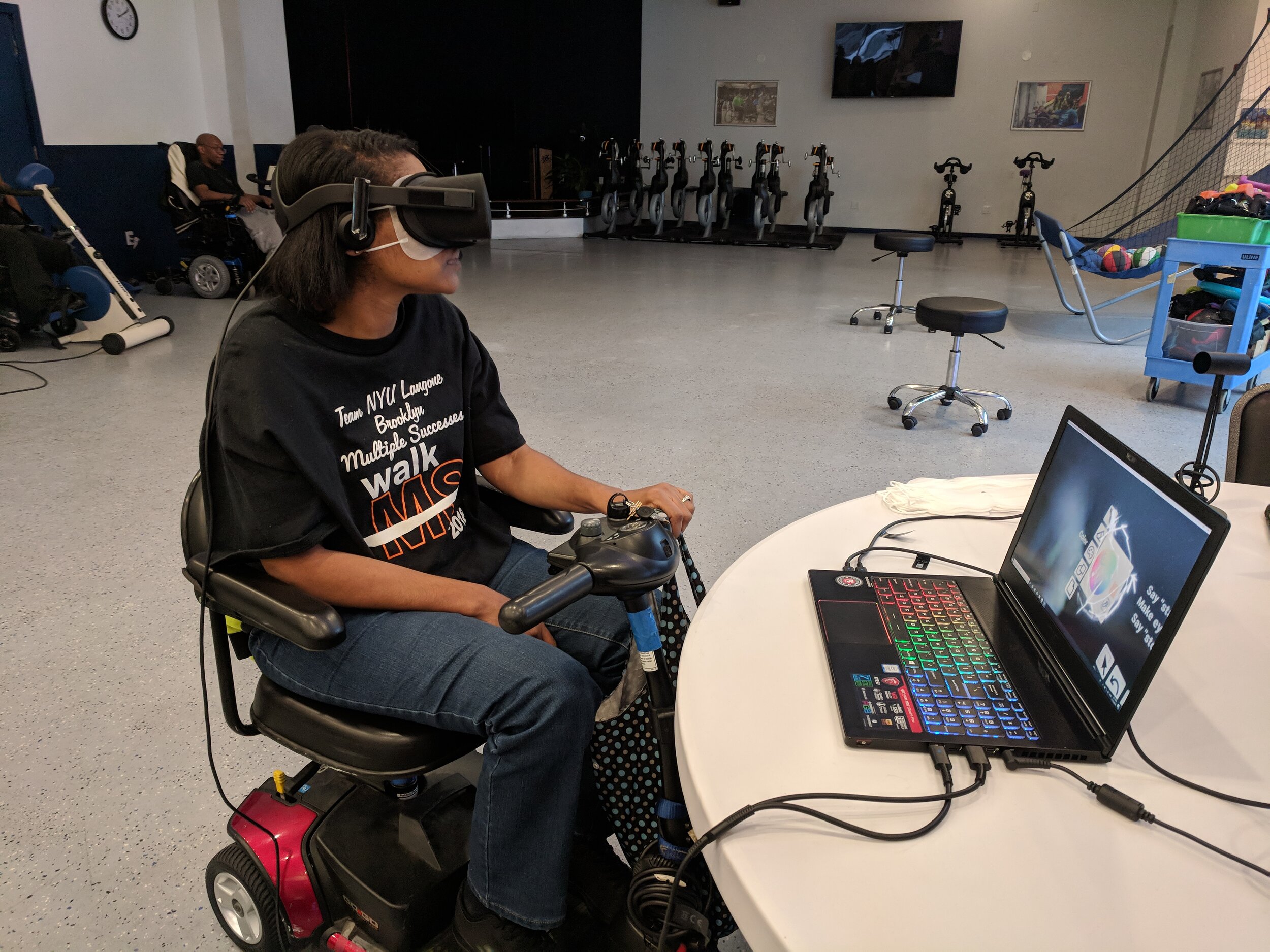

Next, we went out to meet potential users from the ADAPT community. ADAPT, formerly known as United Cerebral Palsy of New York City, is a community network and a leading non-profit organization providing cutting-edge programs and services that improve the quality of life for people with disabilities. There we met Carmen, Eileen, and Chris, who were very interested in painting and loved the idea of drawing in VR. This kind of experience was new to them and, to our surprise, we got good feedback. All three expressed interest in trying out a tool that would let them draw using eye movements and voice commands.

Paper Prototype and User Test

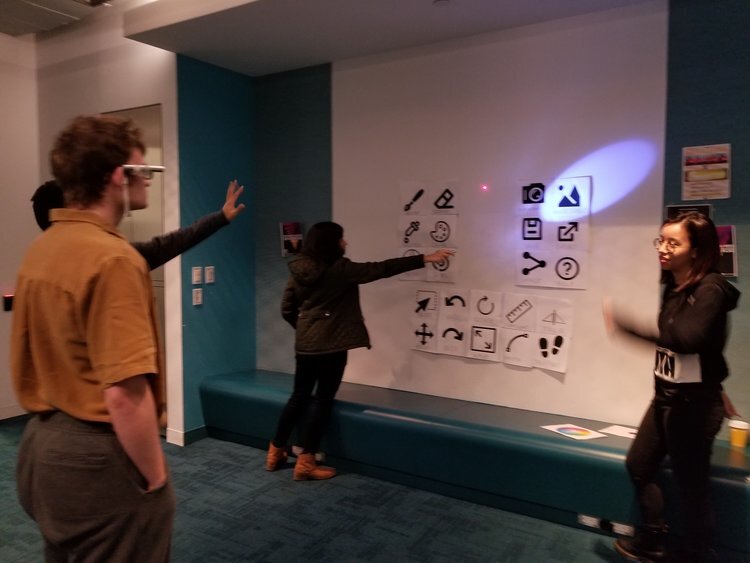

Once we had insights and a whole lot of motivation from our users, we decided to give our interface a structure. This time, we printed our tools on paper and stuck them together in an organized way. We used a laser pointer as the “eye tracker” and were ready for our second round of user testing. Prototyping for VR this way was very interesting, and it made us think clearly about the details of the interactions and about potential issues.

Our second round of user testing revealed two kinds of scenarios, depending on prior experience. Users who had experience with design software could complete the tasks well and pointed out potential issues with the concept, such as figuring out the z-axis, feedback for interactions, and adding stamps. Users from the ADAPT community who had no prior experience wanted the tools to be less ambiguous, and felt that some of the tools were unnecessary.

Taking cues from these responses, we went on to build the high-fidelity prototype in Unreal Engine, which is a good tool for building Virtual Reality content. To make a working prototype, we needed to accomplish several tasks, which are explained below: